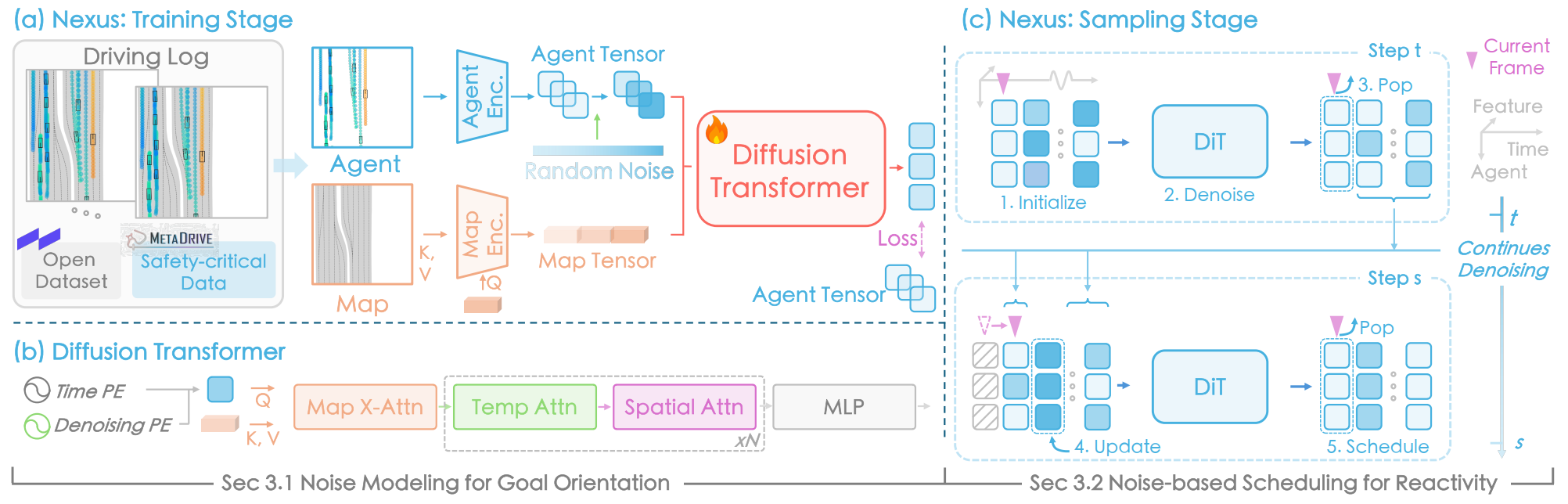

(a) Nexus learns from realistic and safety-critical driving logs and encodes agents and maps separately before feeding them into a diffusion transformer. The model is trained to restore sequences from partially masked agent tokens guided by low-noise ones. (b) Agent tokens are encoded with time and denoising steps, then interact with the maps and dynamics via attention. (c) Tokens with varying noise are scheduled within a chunk for a timely reaction. Each denoising step updates and pops zero-noise tokens, replacing them with next-frame tokens to iteratively generate the scene.
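The scheduling in (c) can be pictured as a sliding window over future frames. The following is a minimal sketch of that bookkeeping only, under stated assumptions: noise is modelled as an integer count of remaining denoising steps, the chunk is staggered so that exactly one token reaches zero noise per step, and `denoise_step` is a placeholder for the diffusion transformer call. The names `Token`, `init_chunk`, `denoise_step`, and `rollout` are hypothetical and are not taken from the Nexus code base.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Token:
    frame: int  # index of the future frame this token represents
    noise: int  # remaining denoising steps; 0 means fully clean


def init_chunk(chunk_size: int, start_frame: int = 0) -> deque:
    """Stagger noise so earlier frames are closer to clean than later ones."""
    return deque(
        Token(frame=start_frame + i, noise=i + 1) for i in range(chunk_size)
    )


def denoise_step(chunk: deque) -> None:
    """Stand-in for one model call: lowers every token's noise by one level.

    A real model would also update the token contents here (assumption).
    """
    for tok in chunk:
        tok.noise -= 1


def rollout(chunk_size: int, num_frames: int) -> list:
    """Emit frame indices in order by repeatedly denoising and popping."""
    chunk = init_chunk(chunk_size)
    emitted = []
    while len(emitted) < num_frames:
        denoise_step(chunk)
        # Pop tokens that reached zero noise and refill with next-frame tokens.
        while chunk and chunk[0].noise == 0:
            emitted.append(chunk.popleft().frame)
            next_frame = chunk[-1].frame + 1 if chunk else emitted[-1] + 1
            chunk.append(Token(frame=next_frame, noise=chunk_size))
    return emitted


frames = rollout(chunk_size=4, num_frames=6)
```

In this toy version every denoising step yields one finished frame, so the window advances one frame per model call instead of waiting for the whole chunk to be denoised.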

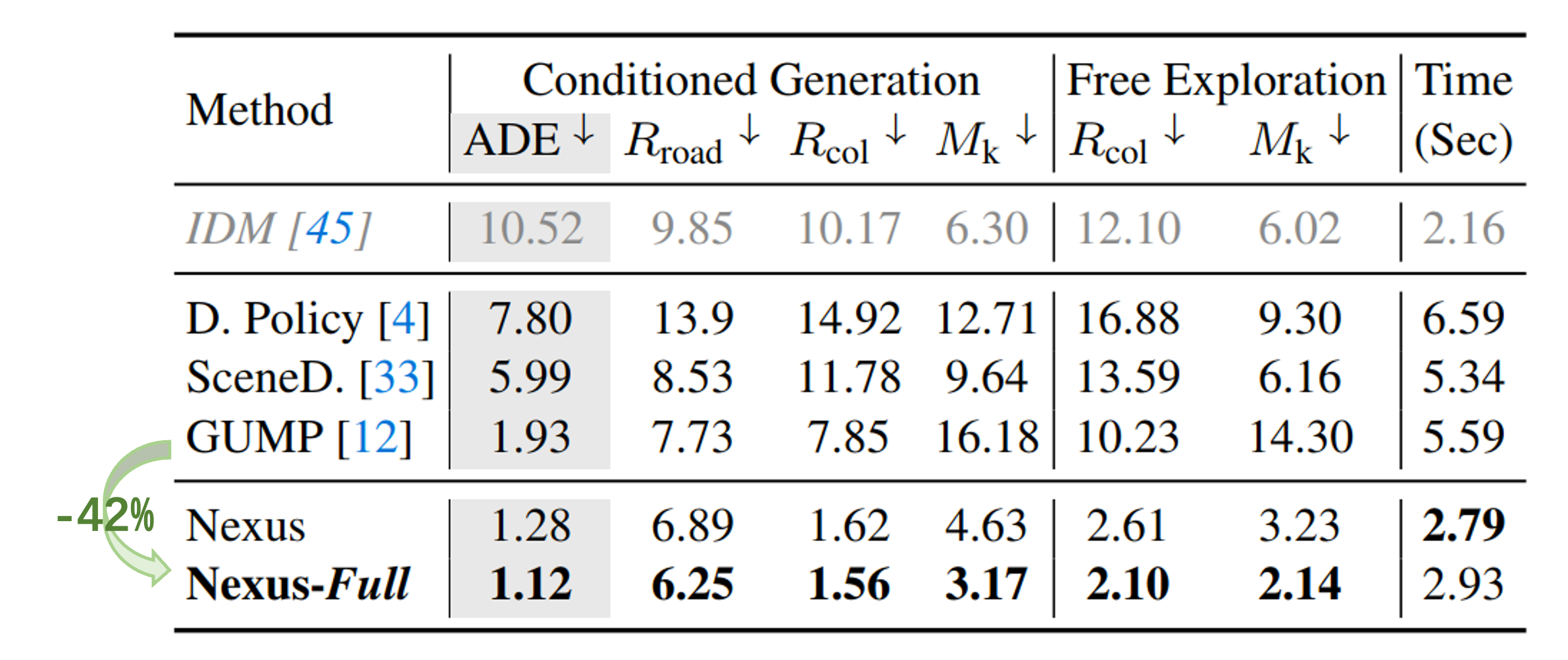

Generation controllability, interactivity, and kinematics compared to nuPlan experts. The tasks predict 8-second futures from 2-second history, with or without a goal.

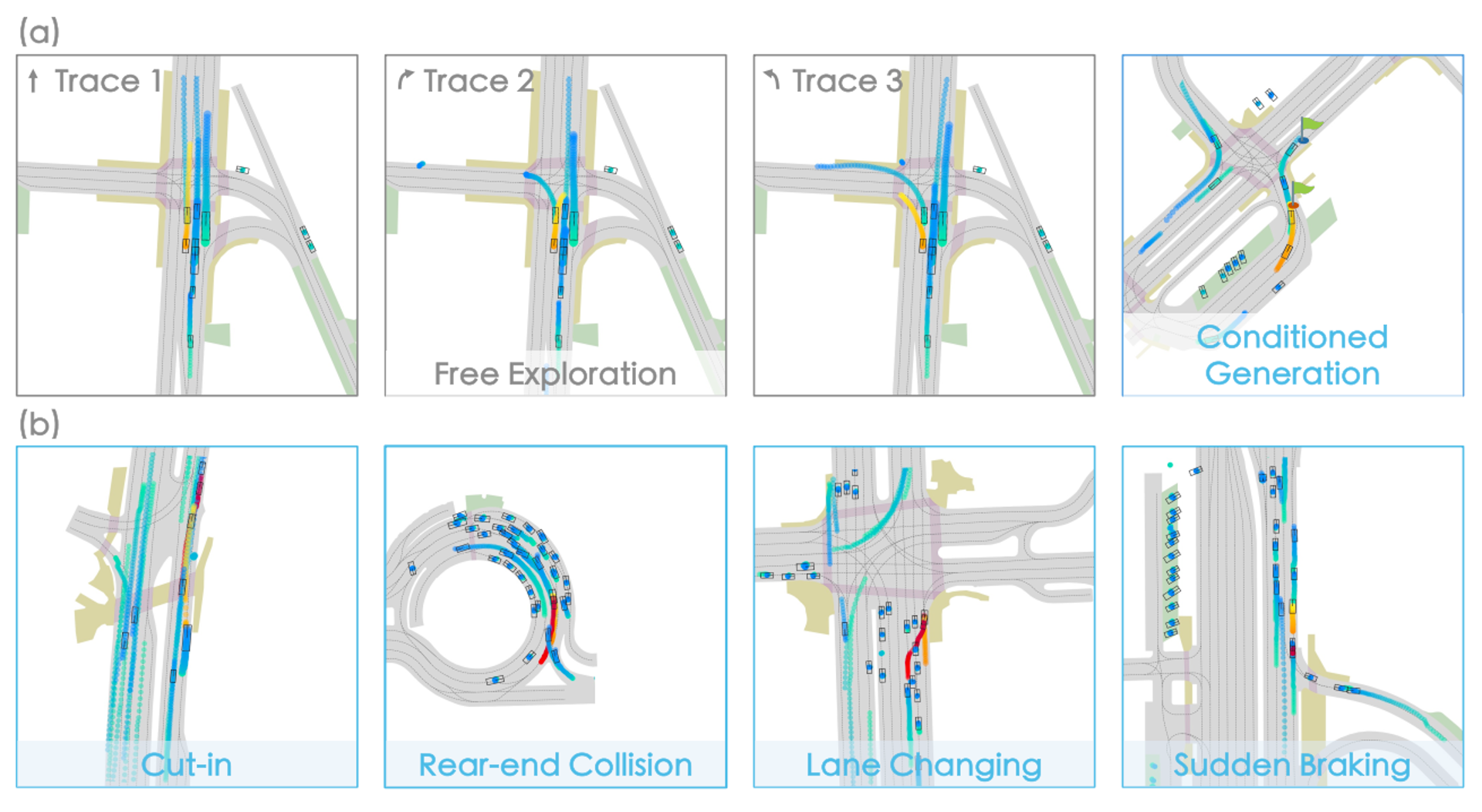

(a) Free exploration generates diverse future scenarios from initialized history, while conditioned generation synthesizes scenes based on predefined goal points. (b) Setting the attacker's goal as the ego's waypoint enables adversarial scenarios.
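The goal conditioning described in this caption can be sketched as a small helper that assembles per-agent goal points. This is an illustrative sketch, not the project's API: the function `build_goals`, its parameters, and the convention that `None` means "no goal, explore freely" are all assumptions made for the example.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def build_goals(
    agent_ids: List[str],
    ego_plan: List[Point],
    attacker_id: Optional[str] = None,
    intercept_index: int = -1,
    preset_goals: Optional[Dict[str, Point]] = None,
) -> Dict[str, Optional[Point]]:
    """Return one goal per agent; None stands for free exploration (assumption)."""
    goals: Dict[str, Optional[Point]] = {aid: None for aid in agent_ids}
    if preset_goals:
        # Conditioned generation: some agents receive predefined goal points.
        goals.update(preset_goals)
    if attacker_id is not None:
        if attacker_id not in goals:
            raise KeyError(f"unknown attacker id: {attacker_id}")
        # Adversarial case: the attacker aims at one of the ego's waypoints.
        goals[attacker_id] = ego_plan[intercept_index]
    return goals


ego_plan = [(0.0, 0.0), (5.0, 1.0)]
goals = build_goals(["a", "b"], ego_plan, attacker_id="b")
```

Here agent `"a"` is left unconstrained while agent `"b"` is steered toward the ego's final waypoint, which is the setup the caption describes for adversarial scenarios.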

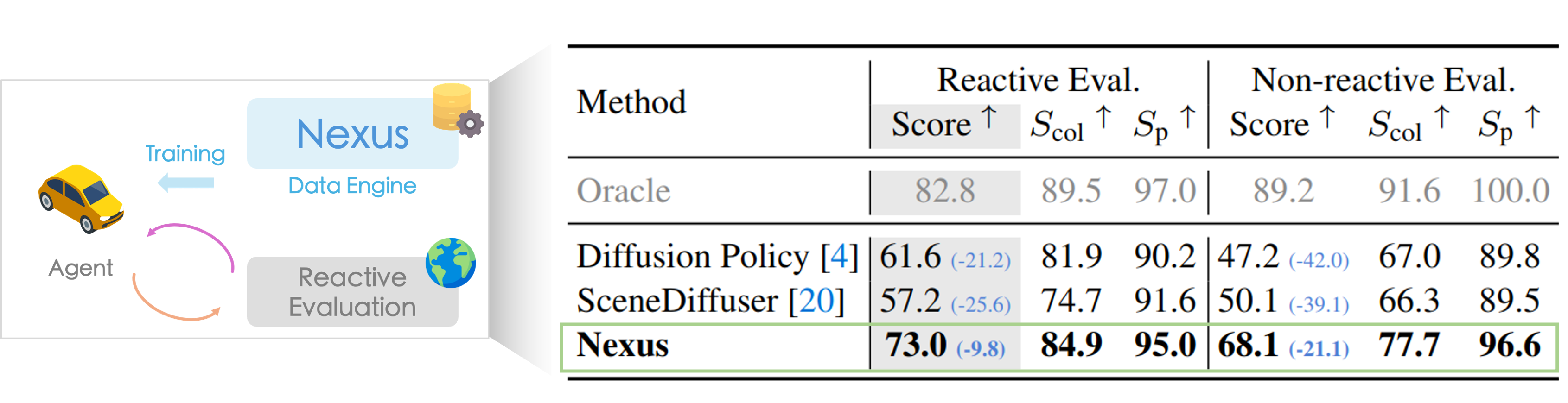

Evaluation of a generation model as a world generator. The scene generator acts as an interactive world model that responds to the baseline planner's actions, and the nuPlan closed-loop metrics reflect its realism.

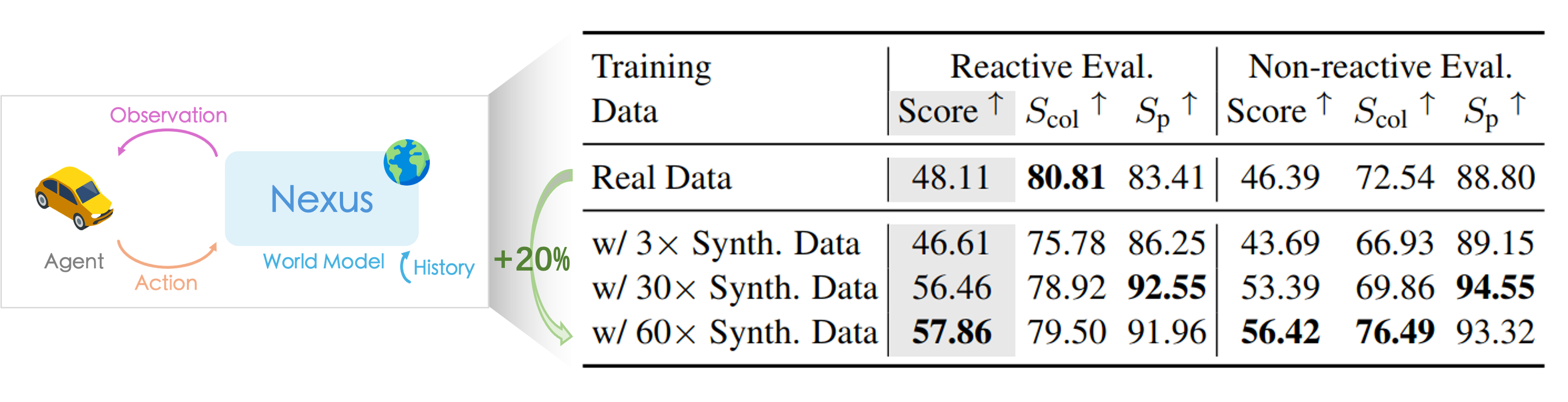

Comparison involving data augmentation using synthetic data. Nexus serves as a data engine, expanding sampled scenes to train the planner at varying scales. The nuPlan closed-loop evaluation demonstrates the performance gains from data augmentation.

The code has been open-sourced. You can now try generating new scenes from the original nuPlan data on GitHub!

Please stay tuned for more work from OpenDriveLab: MTGS, Centaur and Vista.

@article{zhou2024decoupled,
  title={Decoupled Diffusion Sparks Adaptive Scene Generation},
  author={Zhou, Yunsong and Ye, Naisheng and Ljungbergh, William and Li, Tianyu and Yang, Jiazhi and Yang, Zetong and Zhu, Hongzi and Petersson, Christoffer and Li, Hongyang},
  journal={arXiv preprint arXiv:2504.10485},
  year={2025}
}