We are open-sourcing our generalist robotic foundation model, GO-1. Beyond the dataset and model innovations we shared in our previous blog, this time we'd like to talk about the bitter lessons we learned along the way.

One of the hardest lessons in robotics comes from the end-to-end pipeline: from hardware setup to data collection, from model training to real-world deployment. In deep learning, when accuracy issues arise, the natural instinct is to blame the model. This of course makes sense for embodied AI as well. If the predicted actions are not accurate enough, it seems obvious that robots would struggle to execute tasks reliably.

However, once these models are deployed as the robots' "brain", the reality looks different. Prediction accuracy only influences how well an action might succeed, a matter of percentages. The pipeline itself, especially the parts beyond algorithms or model design that are often overlooked by researchers, determines whether an action succeeds at all. This is a strict zero-or-one outcome.

Even if a team manages to squeeze out a 5% or 10% gain in model accuracy, the entire system can still fail if any part of the pipeline is broken. This is the bucket effect: a bucket holds only as much water as its shortest stave allows. A wrong coordinate frame, inconsistencies between data collection and execution, or even a minor hardware failure can cause the robot's actions to collapse completely. When scaling up data collection to more than a hundred robots, even more factors need to be considered.

In the rest of this blog, we will share what we have learned from building this large-scale end-to-end system for our GO-1 model.

The Anatomy of Manipulation Data Quality

From Demonstration to Execution: Measuring Data Consistency

When collecting manipulation data, our key goal is to make sure the demonstrations are reliable. But how do we actually check the quality of the data? The first check is to replay it on the robot with the same initial setup (same robot state, same objects, and same environment) and see if the execution matches the demonstration. We call this collection-execution consistency.

Take a simple example: a screw placed on top of a water bottle. Using teleoperation, we can control the robot arm to pick up the screw. Once this is recorded, we replay the actions while keeping the screw and bottle in the same position to test whether the robot can consistently complete the task.

Of course, robots aren't perfect. Because of differences in their built-in controllers and software, the arm might show grasping errors anywhere from 1 mm to 10 mm. You'll notice this if the robot drops the screw or if the screw ends up off-center in the gripper. These errors highlight the gap between what you demonstrated and what the robot actually executes. And if you train a VLA model with data that has these kinds of errors, it will likely struggle to perform the task, even if the model itself is strong and well trained.
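One way to put a number on collection-execution consistency is to compare the end-effector positions recorded during the demonstration with those measured during replay. The following is a minimal sketch, not our actual tooling: it assumes both trajectories are lists of (x, y, z) positions in metres sampled at the same timestamps, and the function names and the 2 mm tolerance are hypothetical.

```python
import math


def replay_errors_mm(demo, replay):
    """Per-step Euclidean distance (in mm) between demonstrated and replayed positions."""
    if len(demo) != len(replay):
        raise ValueError("trajectories must have the same number of steps")
    # Positions are assumed to be in metres, so scale to millimetres.
    return [1000.0 * math.dist(p, q) for p, q in zip(demo, replay)]


def is_consistent(demo, replay, tol_mm=2.0):
    """True if the worst-case deviation stays within tol_mm (illustrative threshold)."""
    return max(replay_errors_mm(demo, replay)) <= tol_mm


demo = [(0.300, 0.000, 0.200), (0.300, 0.050, 0.150), (0.300, 0.100, 0.100)]
replay = [(0.300, 0.000, 0.200), (0.301, 0.050, 0.150), (0.300, 0.100, 0.105)]
errors = replay_errors_mm(demo, replay)
```

Here the final step deviates by about 5 mm, so the replay would fail a 2 mm check even though the first two steps match closely.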

Now, things get even trickier when you scale up. Imagine collecting data with dozens or even hundreds of robots at the same time, like we do in our data collection factory. In this case, it's not enough for data to work on the robot it was collected from; it also needs to work across different robots. That way, all the data can be treated as one big, unified dataset, rather than being tied to a specific machine. This cross-robot consistency not only boosts scalability but also makes it possible to evaluate models on any robot in the fleet.

Data Quality Issues in VLA Training: A Case Study

Successful data collection doesn't guarantee effective model performance. The video above demonstrates a seemingly successful data collection scenario from a supermarket checkout scanning task that contains two critical flaws impacting model performance. First, static frames at trajectory beginnings teach the model to remain motionless at task initiation, causing it to get "stuck" in the initial state during inference. Second, temporal state ambiguity occurs when the robot receives identical visual feedback after scanning different objects, creating a state aliasing problem where the model cannot track task progress or distinguish which objects have been processed. These issues exemplify how raw demonstration data, even from successful completions, requires careful preprocessing to avoid learning undesirable behaviors that lead to deployment failures.
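The first flaw, static frames at the start of a trajectory, can be handled with a simple preprocessing pass. The sketch below is an illustration under stated assumptions rather than our production pipeline: each frame is assumed to be a list of joint angles in radians, and the function name and the `eps` threshold are hypothetical.

```python
def trim_static_prefix(joint_frames, eps=1e-3):
    """Drop leading frames in which no joint has moved more than eps (rad)
    away from the first frame, keeping one frame just before motion starts."""
    if not joint_frames:
        return []
    first = joint_frames[0]
    for i, frame in enumerate(joint_frames):
        moved = max(abs(a - b) for a, b in zip(frame, first))
        if moved > eps:
            # Keep the last static frame so the episode still starts at rest.
            return joint_frames[max(i - 1, 0):]
    # The robot never moved: keep a single frame.
    return joint_frames[-1:]
```

The threshold needs to sit above the sensor noise of the joint encoders, otherwise jitter is mistaken for the start of motion.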

A Better Data Collection Paradigm

We discovered a powerful approach that dramatically improves training data efficiency through human-in-the-loop collaboration. Here's the problem we noticed: traditional robotic data collection is incredibly wasteful. When you record a 30-second "pick-and-place" task, you end up with hundreds of nearly identical frames where the robot is barely moving, creating training samples that teach the model very little. It's like having a textbook where every page says almost the same thing.

Our solution is called Adversarial Data Collection (ADC), and it works like this: instead of one person quietly demonstrating a task, we get two people working together. As shown in the video, one person operates the robot as usual, while a second "adversarial" operator actively tries to make things harder by moving objects around, changing lighting, or even switching the task instruction mid-demonstration (imagine going from "grasp the kiwi" to "pick up the orange" halfway through). This forces the main operator to constantly adapt and recover, packing way more useful learning experiences into each single demonstration. The result? Every frame becomes meaningful, and our models learn to handle real-world chaos much better.

Best Practices for Training Vision-Language-Action Models

Cross-Embodiment Transfer: Do We Really Need Multi-Embodiment Pre-Training Data?

Building truly foundational robotic models means they should work across different robot bodies, but do we really need to train on multiple robot types from the start? The robotics community typically assumes yes, but we had a different hypothesis. Since robots with different shapes can often produce similar behaviors when their end-effectors follow the same path in space, we wondered: what if a model trained on just one robot could easily transfer to others? To test this, we trained our model exclusively on the AgiBot G1 and then evaluated it across various simulated and real-world platforms. The results are remarkable: our single-embodiment pre-trained model adapts well and even shows better scaling properties during fine-tuning than models pre-trained on diverse robot datasets. The videos below demonstrate this with one of robotics' most challenging tasks: cloth folding. Using fewer than 200 folding demonstrations, our pre-trained model successfully transfers to entirely new robot embodiments, showing that single-embodiment pre-training can be a viable path to cross-embodiment generalization without the complexity of multi-robot training.

Action Space Design for Vision-Language-Action Models

The action space defines the coordinates in which robots operate. Traditional robot controllers often use the end-effector (EEF) pose, expressed relative to the robot's chest or base and as a change from the previous frame. In the VLA era, however, a more direct approach is to control the arm motors through their joint angles. There are also multiple ways to design the learning objective for a model: for example, predicting actions relative to the last frame, or relative to the first frame in an action chunk (as in pi0). From our experiments, we've seen that strong models can easily adapt to different action spaces, even for dexterous manipulation tasks like cloth folding. In fact, they can learn effectively even when the pre-training and fine-tuning stages use different action spaces. To keep things simple for users, we chose to adopt absolute joint space in our open-source model. The key takeaway here is that the robot must execute the actions predicted by the model correctly. Since some robot controllers operate in different coordinate systems, a coordinate transformation may be needed to ensure everything lines up properly.
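To make the difference between these action representations concrete, here is a small sketch that converts a chunk of absolute joint targets into the two relative forms and back. It is illustrative only: the function names are hypothetical, joint angles are assumed to be in radians, and "first frame" is taken to mean the joint configuration at the start of the chunk, which may not match any specific model's exact formulation.

```python
def relative_to_previous(chunk, current):
    """Each action is the delta from the preceding joint configuration."""
    out, prev = [], current
    for q in chunk:
        out.append([a - b for a, b in zip(q, prev)])
        prev = q
    return out


def relative_to_first(chunk, current):
    """Each action is the offset from the configuration at the start of the chunk."""
    return [[a - b for a, b in zip(q, current)] for q in chunk]


def absolute_from_previous(deltas, current):
    """Invert relative_to_previous by accumulating the deltas."""
    out, prev = [], current
    for d in deltas:
        prev = [a + b for a, b in zip(prev, d)]
        out.append(prev)
    return out


def absolute_from_first(offsets, current):
    """Invert relative_to_first by adding each offset to the starting configuration."""
    return [[a + b for a, b in zip(current, o)] for o in offsets]


current = [0.0, 1.0]                            # joint state when the chunk starts
chunk = [[0.5, 1.0], [1.0, 0.5], [1.5, 0.0]]    # absolute joint targets
```

Whichever form the model predicts, the deployment code has to apply the matching inverse before sending commands to the controller. A mismatch here is exactly the kind of pipeline error that makes execution fail outright.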

The Hidden Challenge of Expert Diversity

Here's something most people don't think about when collecting robot training data: different human operators have completely different styles. Unlike text or image datasets scraped from the internet, robotic demonstrations are incredibly sensitive to who's controlling the robot. Some operators move fast, others move slowly; some take direct paths, others take roundabout routes. We call this "expert diversity," and it creates a fascinating problem. As shown in the figure above, this diversity shows up in two ways: spatial multimodality (different trajectory paths) and velocity multimodality (different execution speeds). Here's the key insight—these two types of variation have opposite effects on learning. Spatial variations are actually good because they represent different valid strategies for completing tasks, but velocity variations are just noise that makes training harder. So we developed a velocity model in our improved GO-1-Pro model that acts like a smart filter: it removes the unhelpful speed variations while keeping the useful path variations. This simple insight led to significant performance improvements, highlighting that sometimes the biggest breakthroughs come from understanding your data better, not just building bigger models.
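To illustrate the distinction between the two kinds of variation, the sketch below resamples a trajectory at equal steps of arc length. This is a generic, hand-written technique and not the velocity model used in GO-1-Pro, whose details are not covered here. It assumes a trajectory is a list of points in some position space, and the function name is hypothetical. Two demonstrations that follow the same path at different speeds map to the same resampled points, while different paths stay different.

```python
import math


def resample_by_arc_length(path, n_points):
    """Resample a polyline at n_points equally spaced along its length,
    discarding how fast the original demonstration moved along it."""
    if n_points < 2 or len(path) < 2:
        raise ValueError("need at least two input points and two output points")
    # Cumulative distance travelled at each input point.
    cum = [0.0]
    for p, q in zip(path, path[1:]):
        cum.append(cum[-1] + math.dist(p, q))
    total = cum[-1]
    if total == 0.0:
        return [list(path[0]) for _ in range(n_points)]
    out, seg = [], 0
    for k in range(n_points):
        s = total * k / (n_points - 1)          # target distance along the path
        while seg < len(path) - 2 and cum[seg + 1] < s:
            seg += 1
        span = cum[seg + 1] - cum[seg]
        t = 0.0 if span == 0.0 else (s - cum[seg]) / span
        p, q = path[seg], path[seg + 1]
        out.append([a + t * (b - a) for a, b in zip(p, q)])
    return out


fast = [(0.0, 0.0), (1.0, 0.0)]
slow = [(0.0, 0.0), (0.1, 0.0), (0.2, 0.0), (0.6, 0.0), (1.0, 0.0)]
```

Resampling `fast` and `slow` to three points gives the same start, midpoint, and end, which is the sense in which speed variation can be removed without touching the spatial path.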

Establishing Standards for Reliable Manipulation Evaluation

Step-by-Step VLA Model Validation

Now that we have a trained VLA model, it's time for evaluation. But don't rush straight into deploying it on a real robot. A good first step is to run an open-loop test, which validates whether the model has properly fit the fine-tuning data. If the model fails here, it usually points to issues in your pipeline, such as problems with the dataset, dataloader, or training process. Fix them before moving forward.
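An open-loop test can be as simple as feeding recorded observations to the model and comparing its predictions with the recorded actions, without ever letting the model's output influence the next observation. The following is a minimal sketch under assumptions: `policy` is any callable that maps an observation to a list of floats, episodes are lists of (observation, recorded_action) pairs, and the function name is hypothetical rather than part of the GO-1 codebase.

```python
def open_loop_error(policy, episodes):
    """Mean absolute error between predicted and recorded actions.

    policy:   callable mapping an observation to a predicted action (list of floats)
    episodes: iterable of lists of (observation, recorded_action) pairs
    """
    total, count = 0.0, 0
    for episode in episodes:
        for obs, action in episode:
            pred = policy(obs)
            total += sum(abs(p - a) for p, a in zip(pred, action))
            count += len(action)
    if count == 0:
        raise ValueError("no samples to evaluate")
    return total / count


# Toy stand-in for a trained model: doubles each observation value.
toy_policy = lambda obs: [2.0 * x for x in obs]
toy_episodes = [[([1.0], [2.0]), ([2.0], [4.5])]]
score = open_loop_error(toy_policy, toy_episodes)
```

A large error on data the model was fine-tuned on suggests a problem upstream of the robot, which is much cheaper to debug than a failed real-world rollout.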

Once the model passes the open-loop test, you can deploy it to the robot and begin real-world testing. As an extra precaution, it's also a good idea to first replay previously collected data on the robot to confirm that all hardware is functioning as expected.

The Critical Need for Standardized Testing

Here's a problem that keeps robotics researchers up at night: the random noise in your testing setup is often larger than the actual improvements you're trying to measure. You spend weeks developing what you think is a breakthrough, but when you test it on real hardware, the measurement uncertainty is so high that you can't tell if your method actually works. The same model tested in the morning versus afternoon can show completely different success rates just because the lighting changed or someone moved an object slightly.

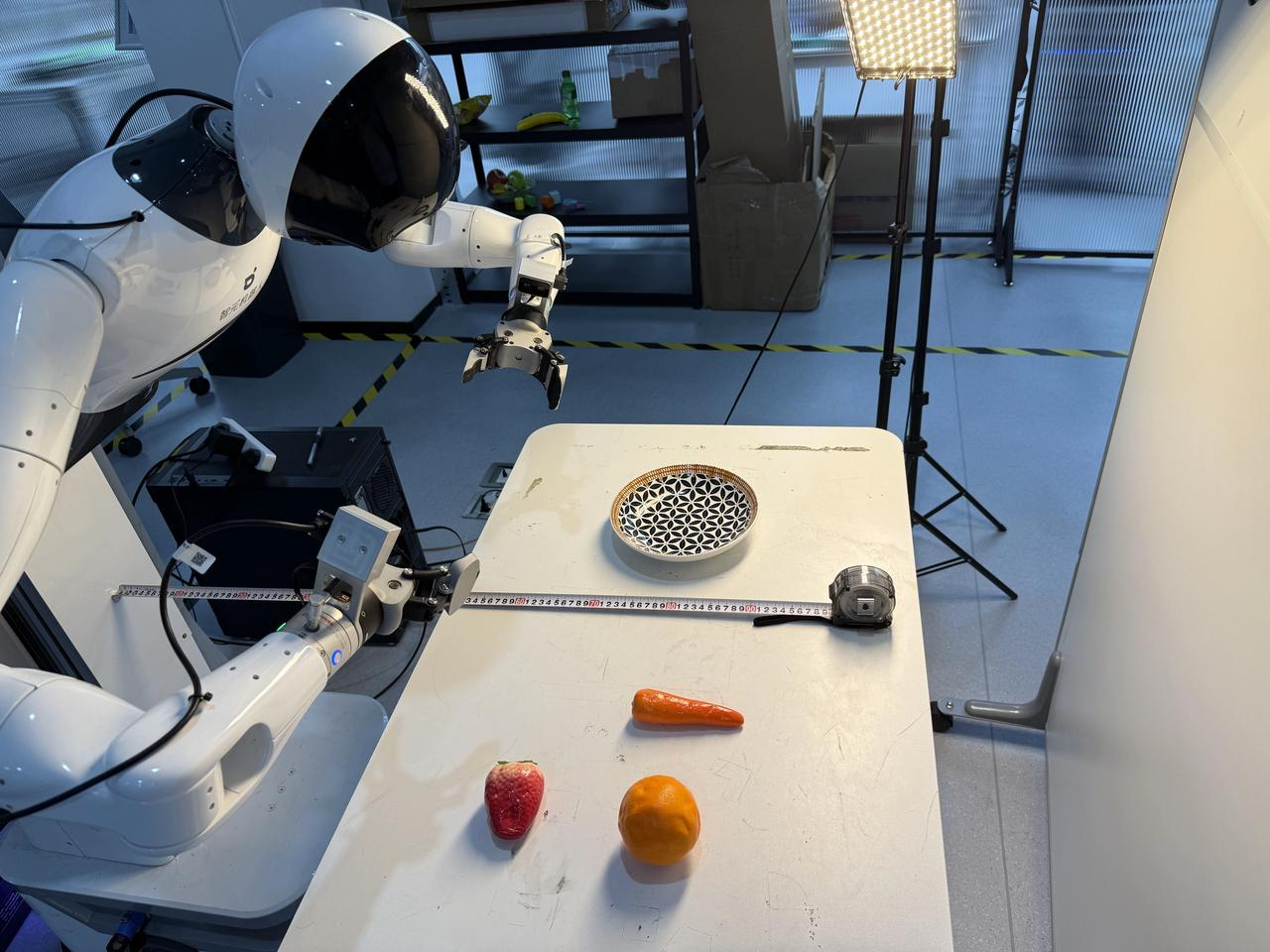

To combat this, we've become obsessive about standardization. As you can see in our setup, we use precise rulers to measure exact object placement, ensuring every strawberry, orange, and carrot sits in the same spot for every trial. We've installed controlled lighting to eliminate natural variations throughout the day. It might look like overkill, but when your testing noise is 5% and your algorithmic improvement is only 2%, this level of precision becomes critical for drawing any meaningful conclusions.
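A quick calculation shows why small gains are so hard to confirm. Even with a perfectly controlled setup, a success rate estimated from a finite number of trials carries binomial sampling error, and any environmental noise adds on top of that. The sketch below covers only the sampling part, with hypothetical function names and made-up trial counts.

```python
import math


def success_rate_se(successes, trials):
    """Standard error of a success rate estimated from independent trials."""
    p = successes / trials
    return math.sqrt(p * (1.0 - p) / trials)


def difference_z(s_a, n_a, s_b, n_b):
    """z-score of the difference between two success rates (unpooled variance)."""
    se = math.sqrt(success_rate_se(s_a, n_a) ** 2 + success_rate_se(s_b, n_b) ** 2)
    if se == 0.0:
        raise ValueError("both rates are exactly 0 or 1; z-score is undefined")
    return (s_b / n_b - s_a / n_a) / se


se_baseline = success_rate_se(50, 100)   # 50% success over 100 trials
z = difference_z(50, 100, 52, 100)       # baseline vs. a method that is 2 points better
```

With 100 trials per method, the standard error of a 50% success rate is already 5 percentage points, and the z-score for a 2-point improvement is well below 1, so the two methods cannot be told apart from this evidence alone.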

Bottom Line

Building GO-1 has been a journey filled with unexpected challenges and hard-won insights. While the robotics community often focuses on algorithmic breakthroughs, we've learned that the devil truly lies in the details—from data quality and pipeline consistency to evaluation standardization. These "bitter lessons" might not make for flashy conference papers, but they're the foundation upon which reliable robotic systems are built. As we open-source GO-1, we hope these practical insights help other researchers avoid the pitfalls we encountered and accelerate progress toward truly capable robotic foundation models.

- Code: github.com/OpenDriveLab/agibot-world

- Model: huggingface.co/agibot-world/GO-1

- Papers:

This article is written by Modi Shi, Yuxiang Lu, Huijie Wang, Shaoze Yang.

This project is accomplished by these contributors.