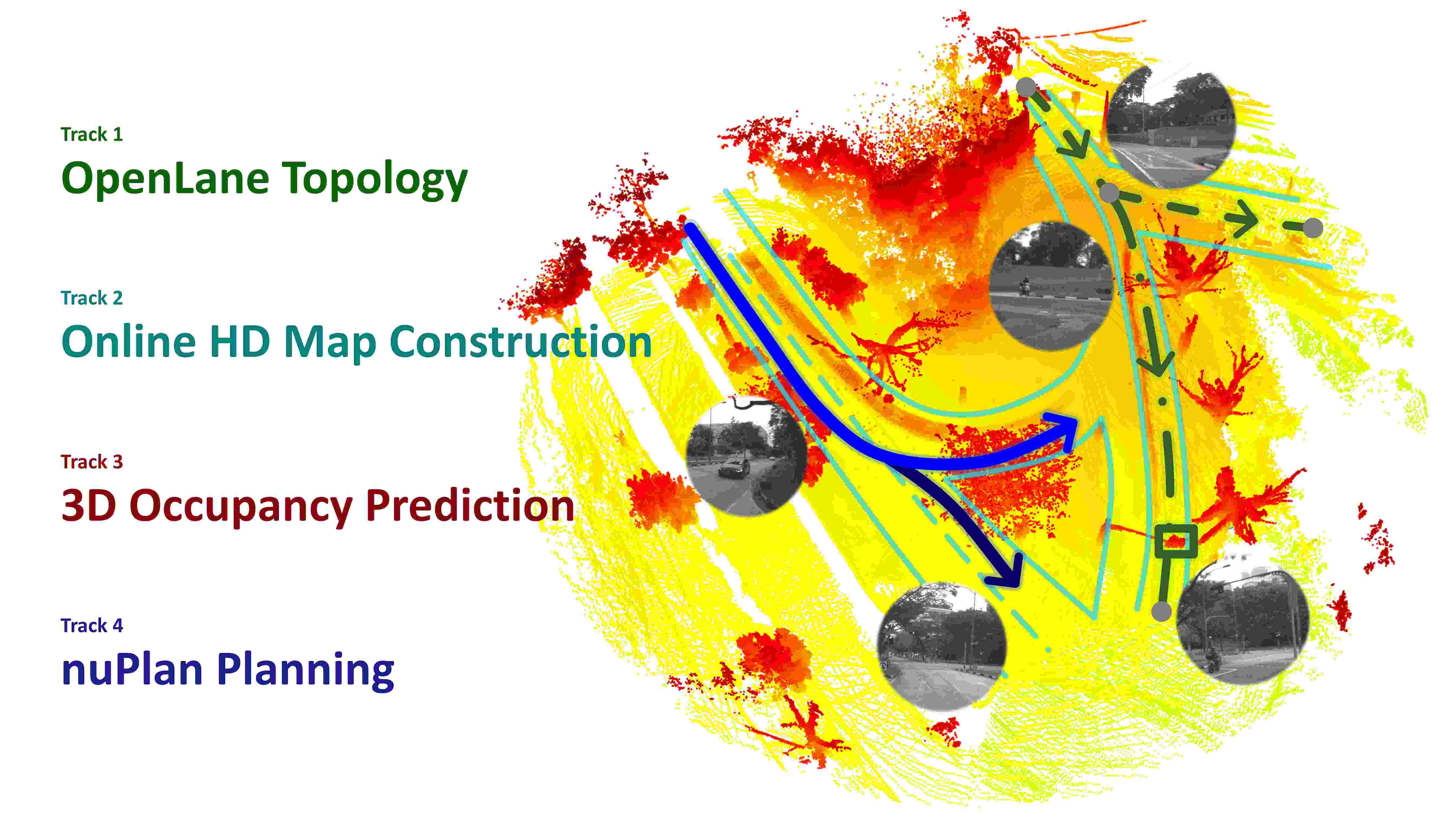

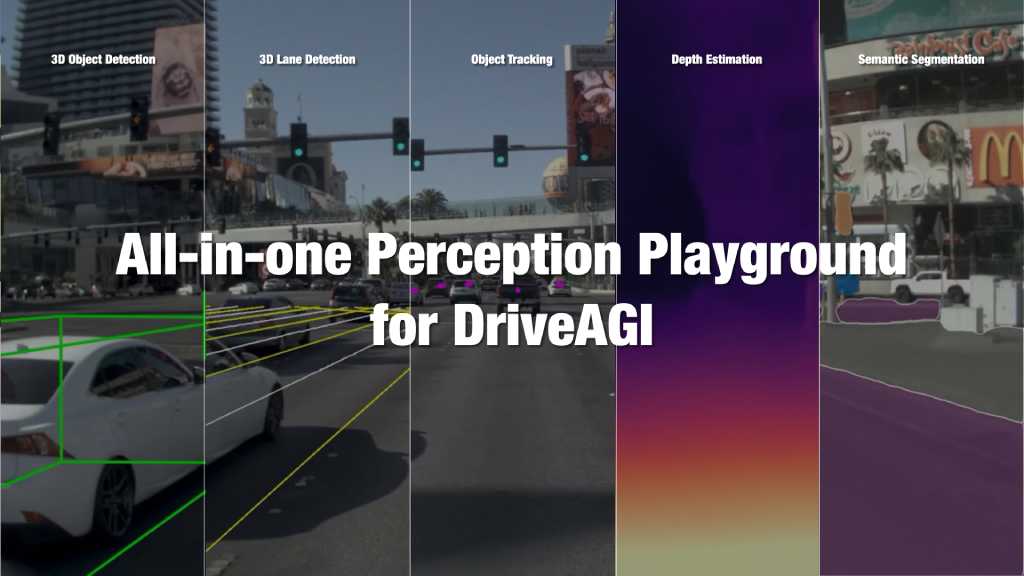

The field of autonomous driving (AD) is rapidly advancing, and while cutting-edge algorithms remain a crucial component, the emphasis on achieving high mean average precision (mAP) for object detectors or conventional segmentation for lane recognition is no longer paramount. Rather, we posit that the future of AD algorithms lies in the integration of perception and planning. In light of this, we propose four newly curated challenges that embody this philosophy.

The Autonomous Driving Challenge at CVPR 2023 has just wrapped up! We have witnessed intense engagement from the community. Researchers from universities and companies in China, Germany, France, Singapore, the United States, the United Kingdom, and elsewhere joined to tackle the challenging tasks of autonomous driving. With over 270 teams from 15 countries and regions, the challenge has been a true showcase of global talent and innovation. Across 2,300 submissions, the top spot has been fiercely contested. We received a few inquiries regarding eligibility, challenge rules, and technical reports. Rest assured that all concerns have been appropriately addressed. The fairness and integrity of the Challenge have always been our highest priority.

| Rank | Country / Region | Institution | OLS (primary) | Team Name | $\text{DET}_{l}$ | $\text{DET}_{t}$ | $\text{TOP}_{ll}$ | $\text{TOP}_{lt}$ |
|---|---|---|---|---|---|---|---|---|

Innovation Award goes to "PlatypusWhisperers" for


If you use the challenge dataset in your paper, please consider citing the following BibTeX:

The OpenLane-V2 dataset* is the perception and reasoning benchmark for scene structure in autonomous driving. Given multi-view images covering the whole panoramic field of view, participants are required to deliver not only perception results of lanes and traffic elements but also topology relationships among lanes and between lanes and traffic elements simultaneously.

The primary metric is the OpenLane-V2 Score (OLS), which comprises evaluations on three sub-tasks. On the website, we provide tools for data access, model training, evaluation, and visualization. To submit your results on EvalAI, please follow the submission instructions.
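The exact OLS formula is not stated here. A minimal sketch, assuming OLS averages the four leaderboard sub-scores ($\text{DET}_{l}$, $\text{DET}_{t}$, $\text{TOP}_{ll}$, $\text{TOP}_{lt}$) after applying a transform `f` to the two topology terms. The square-root default reflects our reading of the OpenLane-V2 devkit documentation and should be verified there; passing `f=lambda x: x` yields a plain average. `openlane_v2_score` is a hypothetical helper, not part of any official API.

```python
import math
from typing import Callable


def openlane_v2_score(det_l: float, det_t: float, top_ll: float, top_lt: float,
                      f: Callable[[float], float] = math.sqrt) -> float:
    """Hypothetical OLS helper.

    Assumption: OLS = (DET_l + DET_t + f(TOP_ll) + f(TOP_lt)) / 4,
    with every sub-score in [0, 1]. Verify f against the official devkit.
    """
    scores = {"det_l": det_l, "det_t": det_t, "top_ll": top_ll, "top_lt": top_lt}
    for name, value in scores.items():
        # Reject out-of-range inputs instead of silently averaging them.
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    # Detection terms enter directly; topology terms pass through f first.
    return (det_l + det_t + f(top_ll) + f(top_lt)) / 4.0
```

For example, `openlane_v2_score(0.4, 0.6, 0.25, 0.16)` evaluates to 0.475 under the square-root assumption, versus 0.3525 with the identity transform, so the choice of `f` noticeably lifts low topology scores.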

| Award | Prize |
|---|---|
| Outstanding Champion | USD $15,000 |
| Honorable Runner-up | USD $5,000 |
| Innovation Award | USD $5,000 |

[email protected]

| Rank | Country / Region | Institution | mAP (primary) | Team Name | Ped Crossing | Divider | Boundary |
|---|---|---|---|---|---|---|---|

Innovation Award goes to "MACH" for


If you use the challenge dataset in your paper, please consider citing the following BibTeX:

Compared to conventional lane detection, the constructed HD map provides richer semantic information with multiple categories. Vectorized polyline representations are adopted to handle complicated and even irregular road structures. Given inputs from onboard sensors (cameras), the goal is to construct the complete local HD map.

The primary metric is mAP based on Chamfer distance over three categories, namely lane divider, boundary, and pedestrian crossing. Please refer to our GitHub for details on data and evaluation. Submission is conducted on EvalAI.
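The text says mAP is computed from Chamfer distance without spelling out the procedure. A minimal sketch, assuming the formulation common for vectorized HD maps: the Chamfer distance between two polylines is the average of the two directed mean nearest-neighbor point distances, a prediction is a true positive if it lies within a distance threshold of a still-unmatched ground truth, and AP is the uninterpolated mean of precision at each true positive. The helper names, greedy matching, and uninterpolated AP are simplifying assumptions; the official details (thresholds, point resampling, interpolation) are on GitHub.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _mean_min_dist(src: Sequence[Point], dst: Sequence[Point]) -> float:
    # For each point in src, take the distance to its nearest point in dst, then average.
    return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)


def chamfer_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Chamfer distance between two polylines given as point lists."""
    if not a or not b:
        raise ValueError("polylines must contain at least one point")
    return 0.5 * (_mean_min_dist(a, b) + _mean_min_dist(b, a))


def average_precision(preds: List[Tuple[float, Sequence[Point]]],
                      gts: List[Sequence[Point]],
                      threshold: float) -> float:
    """Hypothetical AP for one category at one Chamfer threshold.

    preds: (confidence, polyline) pairs; gts: ground-truth polylines.
    Predictions are matched greedily in descending confidence to the closest
    unmatched ground truth whose Chamfer distance is below `threshold`.
    """
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    tp_flags: List[bool] = []
    for _, poly in sorted(preds, key=lambda x: x[0], reverse=True):
        best_j, best_d = -1, threshold
        for j, gt in enumerate(gts):
            if matched[j]:
                continue
            d = chamfer_distance(poly, gt)
            if d < best_d:
                best_j, best_d = j, d
        if best_j >= 0:
            matched[best_j] = True
        tp_flags.append(best_j >= 0)
    # Uninterpolated AP: precision at each true positive, summed, divided by #GT.
    ap, tp = 0.0, 0
    for k, is_tp in enumerate(tp_flags, start=1):
        if is_tp:
            tp += 1
            ap += tp / k
    return ap / len(gts)
```

Under these assumptions, mAP would be the mean of `average_precision` over the three categories (pedestrian crossing, divider, boundary) and over a set of thresholds, for instance 0.5, 1.0, and 1.5 m.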

| Award | Prize |
|---|---|
| Outstanding Champion | USD $15,000 |
| Honorable Runner-up | USD $5,000 |
| Innovation Award | USD $5,000 |

[email protected]

| Rank | Country / Region | Institution | mIoU (primary) | Team Name |
|---|---|---|---|---|

Innovation Award goes to "NVOCC" for


Innovation Award goes to "occ_transformer" for


If you use the challenge dataset in your paper, please consider citing the following BibTeX:

Unlike previous perception representations, which depend on predefined geometric primitives or perceived data modalities, occupancy offers the flexibility to describe entities of arbitrary shape. In this track, we provide a large-scale occupancy benchmark. Given multi-view images covering the whole panoramic field of view, participants are required to provide the occupancy state and semantics of each voxel in 3D space for the complete scene.

The primary metric of this track is mIoU. On the website, we provide detailed information on the dataset, evaluation, and submission instructions. The test server is hosted on EvalAI.
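A minimal sketch of mIoU over flattened voxel labels: for each class, IoU is TP / (TP + FP + FN), and mIoU averages it over the classes that appear in either the prediction or the ground truth. The optional `mask` (voxels to score) and `ignore_classes` (e.g. a free-space label) arguments are assumptions about how the benchmark restricts evaluation; the exact rules are documented on the website. `mean_iou` is a hypothetical helper.

```python
from typing import Optional, Sequence


def mean_iou(pred: Sequence[int], gt: Sequence[int], num_classes: int,
             mask: Optional[Sequence[bool]] = None,
             ignore_classes: Sequence[int] = ()) -> float:
    """Hypothetical mIoU over flattened per-voxel class labels."""
    if len(pred) != len(gt):
        raise ValueError("pred and gt must have the same number of voxels")
    if mask is None:
        mask = [True] * len(gt)
    elif len(mask) != len(gt):
        raise ValueError("mask must have one entry per voxel")
    inter = [0] * num_classes  # TP per class
    union = [0] * num_classes  # TP + FP + FN per class
    for p, g, keep in zip(pred, gt, mask):
        if not keep:
            continue  # voxel excluded from evaluation (assumed visibility mask)
        if p == g:
            inter[p] += 1
            union[p] += 1
        else:
            union[p] += 1  # false positive for the predicted class
            union[g] += 1  # false negative for the true class
    ious = [inter[c] / union[c] for c in range(num_classes)
            if c not in ignore_classes and union[c] > 0]
    return sum(ious) / len(ious) if ious else 0.0
```

For instance, with `pred=[0, 1, 1, 2]` and `gt=[0, 1, 2, 2]`, the per-class IoUs are 1.0, 0.5, and 0.5, giving an mIoU of 2/3.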

| Award | Prize |
|---|---|
| Outstanding Champion | USD $15,000 |
| Honorable Runner-up | USD $5,000 |
| Innovation Award (2 awards) | USD $5,000 |

[email protected]

[email protected]

| Rank | Country / Region | Institution | Overall Score (primary) | Team Name | CH1 Score | CH2 Score | CH3 Score |
|---|---|---|---|---|---|---|---|

Innovation Award goes to "AID" for


Honorable Mention for Innovation Award goes to "raphamas" for


If you use the challenge dataset in your paper, please consider citing the following BibTeX:

Previous benchmarks focus on short-term motion forecasting and are limited to open-loop evaluation. nuPlan introduces long-term planning of the ego vehicle along with corresponding metrics. Submissions are provided as Docker containers, which are deployed for simulation and evaluation.

The primary metric is the mean score over three increasingly complex modes: open-loop, closed-loop non-reactive agents, and closed-loop reactive agents. Participants can follow the steps to begin the competition. To submit your results on EvalAI, please follow the submission instructions.
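Assuming the CH1, CH2, and CH3 leaderboard columns correspond to the open-loop, closed-loop non-reactive, and closed-loop reactive modes in that order, the overall score is their unweighted mean. The column-to-mode mapping and the helper name `overall_score` are illustrative assumptions; the per-mode scores themselves come from the nuPlan simulation and are not computed here.

```python
from statistics import fmean


def overall_score(open_loop: float,
                  closed_loop_non_reactive: float,
                  closed_loop_reactive: float) -> float:
    """Hypothetical helper: unweighted mean of the three per-mode scores.

    Assumption: the arguments correspond to the CH1, CH2, and CH3 columns.
    """
    return fmean([open_loop, closed_loop_non_reactive, closed_loop_reactive])
```

For example, per-mode scores of 90, 60, and 30 give an overall score of 60.0.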

| Award | Prize |
|---|---|
| Outstanding Champion | USD $10,000 |
| Honorable Runner-up (2nd) | USD $8,000 |
| Honorable Runner-up (3rd) | USD $5,000 |
| Innovation Award | USD $5,000 |

[email protected]

Thanks for your participation! If you require a participation certificate, please send the names of all team members, the institution, the method name (optional), the team name, and the participating track to [email protected].

Only results made PUBLIC on the leaderboard are valid. Please make sure your results are public before the deadline and remain public on the leaderboard after it.

For submissions to all tracks, please make sure the information attached to each submission is correct, especially the email address. After the submission deadlines, we will contact participants by email to request further information for qualification and certificates. Late requests to claim ownership of a particular submission will not be considered in any form. Incorrect email addresses will lead to disqualification.

The primary objective of this Autonomous Driving Challenge is to facilitate all aspects of autonomous driving.

Despite the current trend toward data-driven research, we strive to provide opportunities for participants without access to massive data or computing resources.

To this end, we would like to reiterate the following rules:

Leaderboard

Certificates will be provided to all participants.

All publicly available datasets and pretrained weights are allowed, including Objects365, Swin-T, DD3D-pretrained VoVNet, InternImage, etc.

However, the use of private datasets or private pretrained weights is prohibited.

Award

To claim a cash award, all participants are required to submit a technical report.

Cash awards for the first three places will be distributed based on the leaderboard rankings. However, other factors, such as model size and data usage, will also be taken into consideration.

As the Innovation Award is intended to encourage novel and innovative ideas, its winners are encouraged to use only ImageNet and COCO as external data.

The challenge committee reserves the right of final interpretation of the cash awards.

Please refer to the rules.