Publication

We position OpenDriveLab as one of the top research teams around the globe, since we have talented people and publish work at top venues.

Antonio Loquercio,

Preprint 2026 Position Paper

Yihan Hu,

Keyu Li,

Xizhou Zhu,

Siqi Chai,

Senyao Du,

Tianwei Lin,

Lewei Lu,

Qiang Liu,

Jifeng Dai,

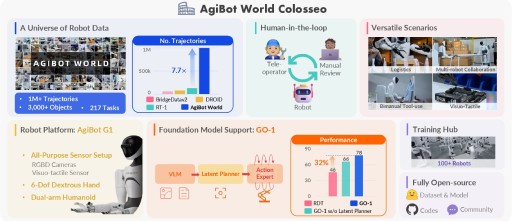

Team AgiBot-World

Bernhard Jaeger,

Yinghui Li,

Di Huang,

Kunyang Lin,

Jinwei Li,

Wencong Zhang,

Tianwei Lin,

Longyan Wu,

Zhizhong Su,

Hao Zhao,

Ya-Qin Zhang,

Xiangyu Yue,

Siqi Liang,

Yuxian Li,

Yukuan Xu,

Yichao Zhong,

Fu Zhang,

Gangcheng Jiang,

Haoguang Mai,

Kaiyang Wu,

Lirui Zhao,

Yibo Yuan

Chiming Liu,

Guanghui Ren,

Di Huang,

Maoqing Yao,

Guanghui Ren,

Maoqing Yao,

Yanjie Zhang,

Delong Li,

Chuanzhe Suo,

Chuang Wang,

Ruoyi Qiao,

Liang Pan,

Haoguang Mai,

Hao Zhao,

Cunyuan Zheng,

Jia Zeng,

Heming Cui,

Maoqing Yao,

Jia Zeng,

Yanchao Yang,

Guyue Zhou,

Heming Cui,

Longyan Wu,

Jieji Ren,

Ran Huang,

Guoying Gu,

Jia Zeng,

Wenke Xia,

Hao Dong,

Haoming Song,

Dong Wang,

Di Hu,

Heming Cui,

Bin Zhao,

Xuelong Li,

Guang Li,

Junli Wang,

Yinfeng Gao,

Zhang Zhang,

Liang Wang,

Hangjun Ye,

Tieniu Tan,

Long Chen,

Haohan Yang,

Ke Guo,

Hongchen Li,

Chen Lv

IEEE-TPAMI 2026

Katrin Renz,

Long Chen,

Yuqian Shao,

Xiangyu Yue,

William Ljungbergh,

Hongzi Zhu,

Christoffer Petersson,

Zhiding Yu,

Shiyi Lan,

Jose M. Alvarez

arXiv 2025

Jun Zhang,

Daniel Dauner,

Marcel Hallgarten,

Xinshuo Weng,

Zhiyu Huang,

Igor Gilitschenski,

Boris Ivanovic,

Marco Pavone,

Bo Dai,

Jia Zeng,

Jun Zhang,

Yanan Sun,

Yang Li,

Jia Zeng,

Huilin Xu,

Pinlong Cai,

Feng Xu,

Lu Xiong,

Jingdong Wang,

Futang Zhu,

Kai Yan,

Chunjing Xu,

Tiancai Wang,

Fei Xia,

Beipeng Mu,

Dahua Lin,

Jia Zeng,

Hang Qiu,

Hongzi Zhu,

Minyi Guo,

Patrick Langechuan Liu,

Jiangwei Xie,

Conghui He,

Zhenjie Yang,

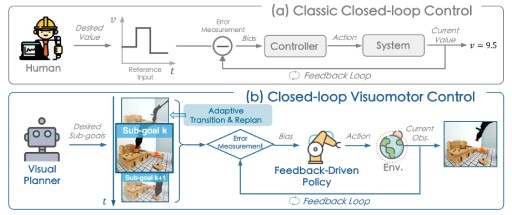

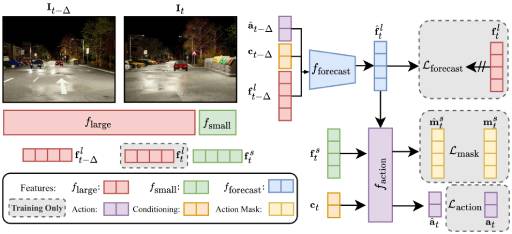

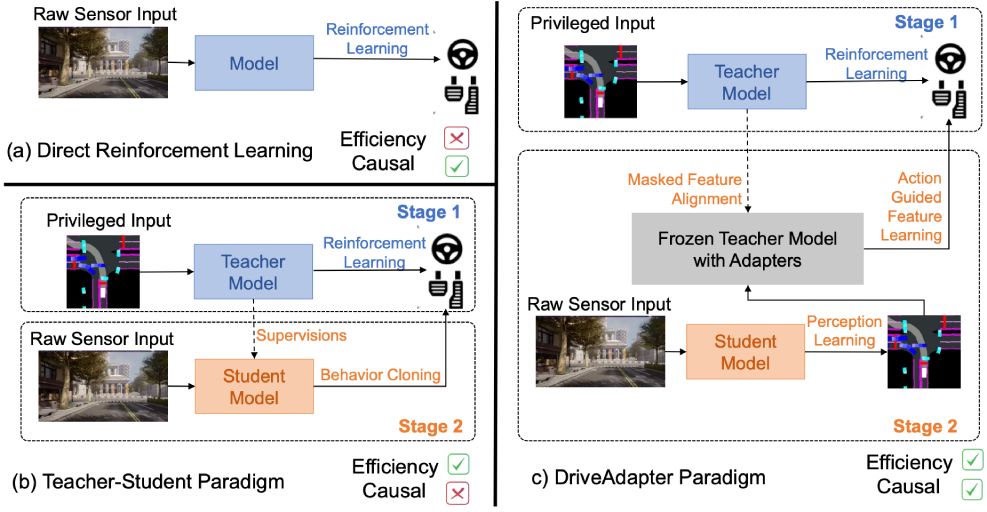

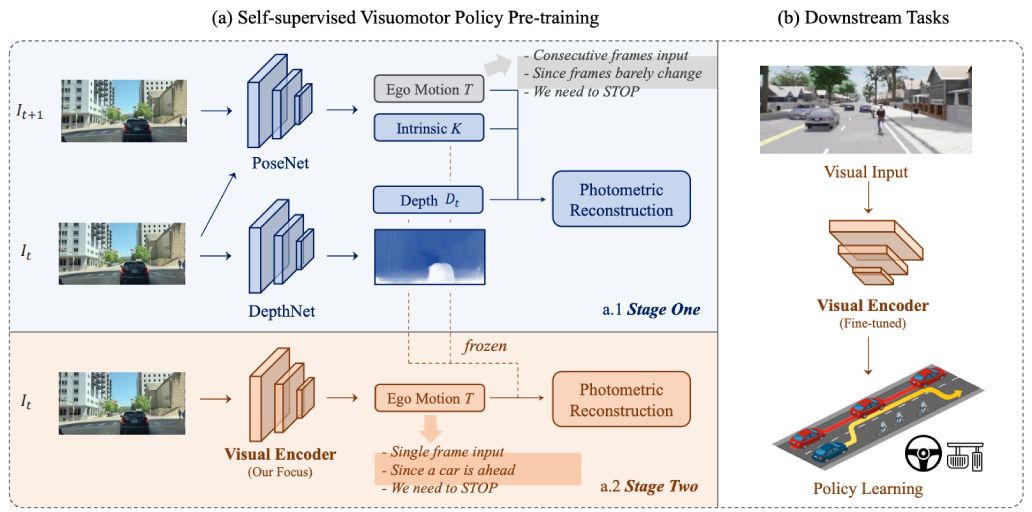

An intuitive and straightforward fully self-supervised framework for policy pre-training in visuomotor driving.

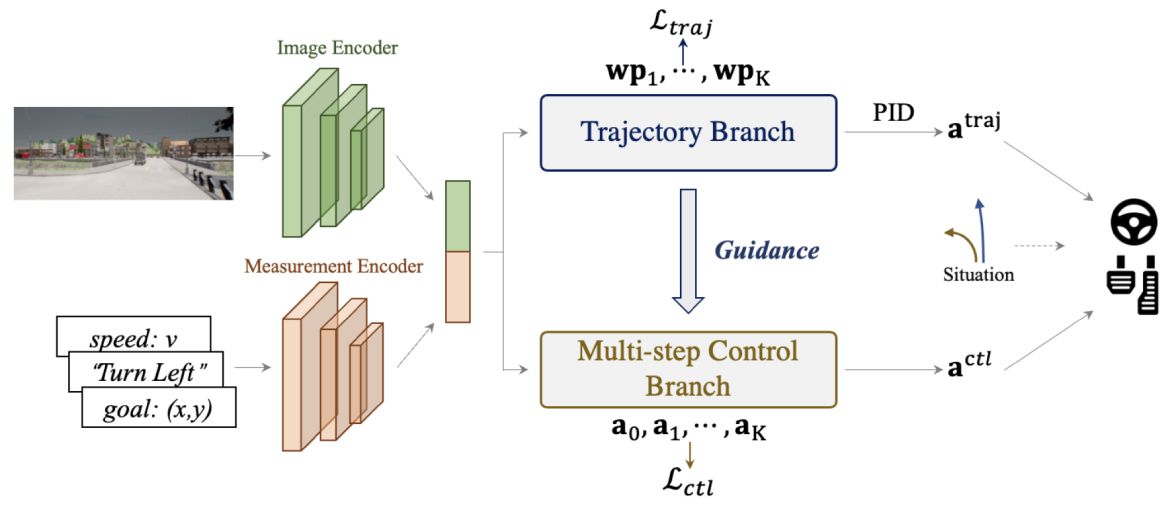

We take the initiative to explore combining a controller based on a planned trajectory with control prediction.

Dacheng Tao

Hongchen Li,

Lei Shi,

Mingyang Shang,

Zengrong Lin,

Gaoqiang Wu,

Zhihui Hao,

Xianpeng Lang,

Jia Hu,

Shihao Li,

Tuo An,

Peng Su,

Boyang Wang,

Haiou Liu,

Chen Lv,

Zhenhua Wu,

Carl Lindström,

Peng Su,

Matthias Nießner,

Peijin Jia,

Kun Jiang,

Haisong Liu,

Yang Chen,

Haiguang Wang,

Jia Zeng,

Limin Wang

Jingdong Wang,

Yang Li,

Zhenbo Liu,

Peijin Jia,

Yuting Wang,

Shengyin Jiang,

Feng Wen,

Hang Xu,

Wei Zhang,

Jifeng Dai,

Lewei Lu,

Jia Zeng,

Hanming Deng,

Hao Tian,

Enze Xie,

Jiangwei Xie,

Yang Li,

Si Liu,

Jianping Shi,

Dahua Lin,

Wenwen Tong,

Tai Wang,

Silei Wu,

Hanming Deng,

Yi Gu,

Lewei Lu,

Dahua Lin,

Shaoshuai Shi,

Shangzhe Di,

Si Liu,

IROS 2023

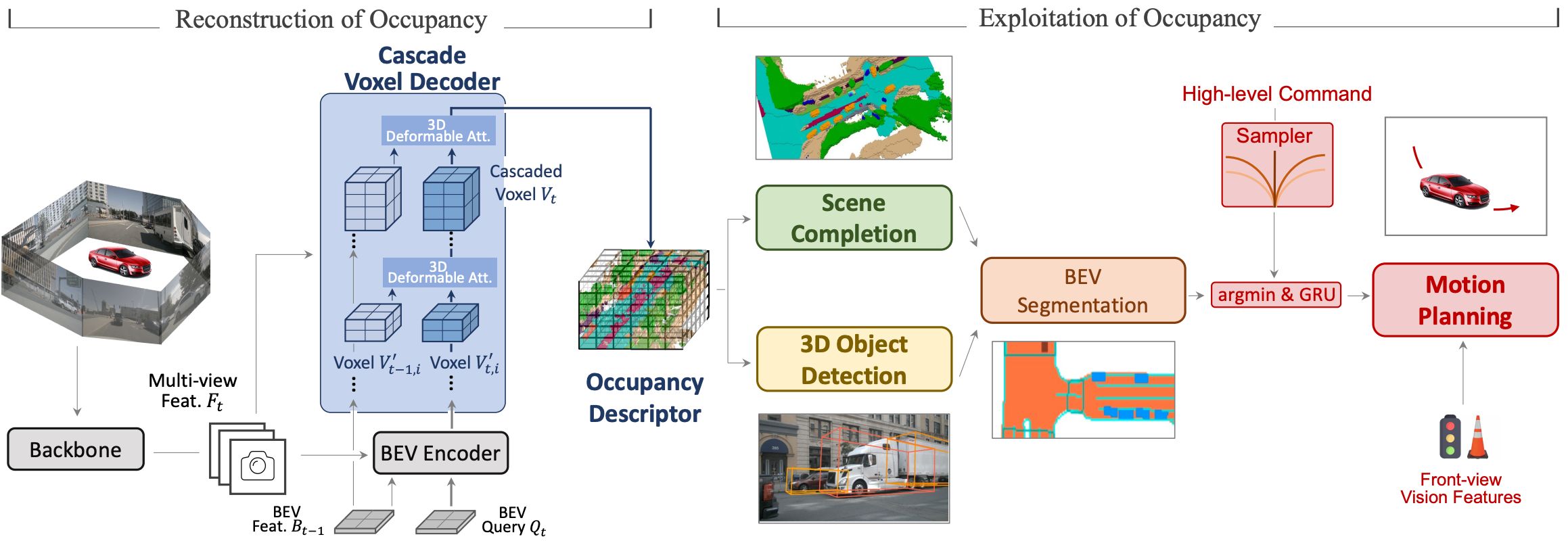

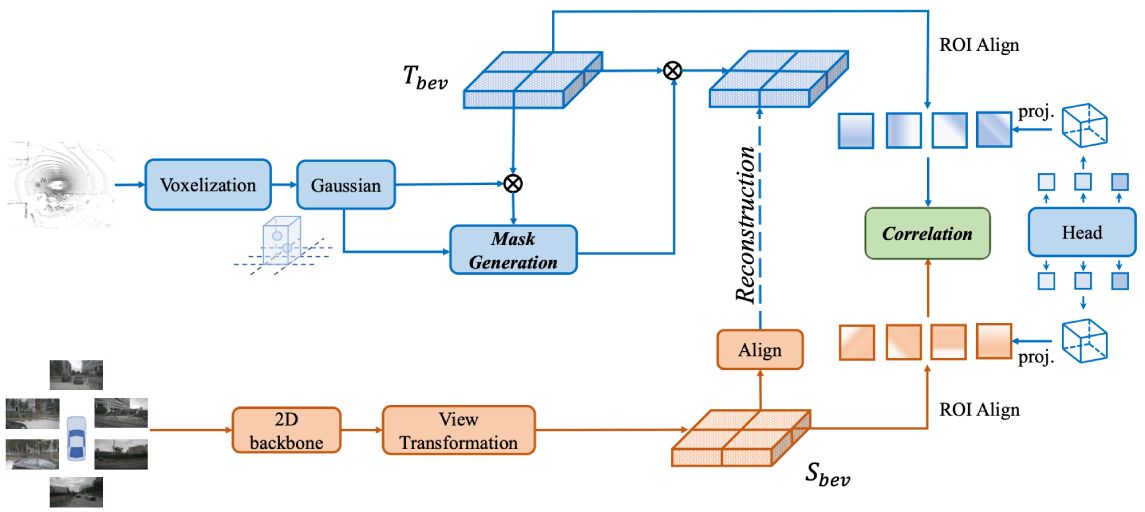

We propose Sparse Dense Fusion (SDF), a complementary framework that incorporates both sparse-fusion and dense-fusion modules via the Transformer architecture.

Yu Liu,

Jia Zeng,

Hanming Deng,

Lewei Lu,

CVPR 2023

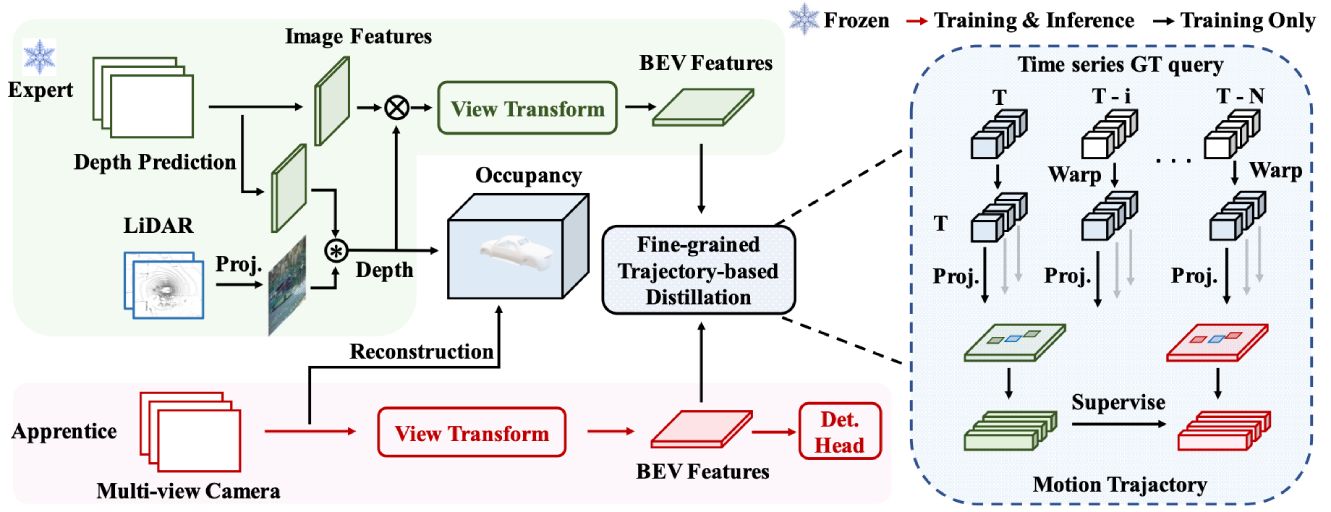

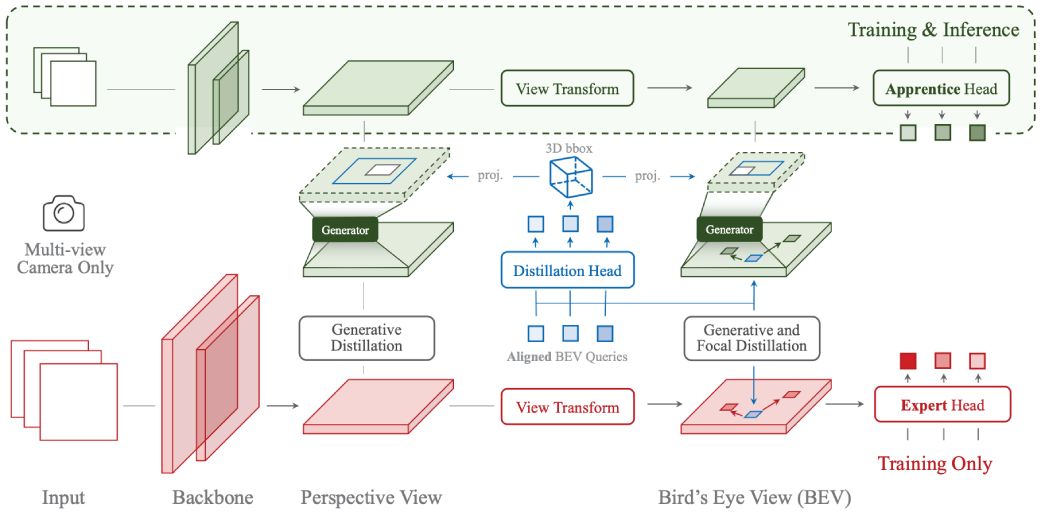

We investigate how to distill knowledge from an imperfect expert. We propose FD3D, a Focal Distiller for 3D object detection.

Chenyu Yang,

Yuntao Chen,

Hao Tian,

Chenxin Tao,

Xizhou Zhu,

Zhaoxiang Zhang,

Gao Huang,

Lewei Lu,

Jie Zhou,

Jifeng Dai

CVPR 2023 Highlight

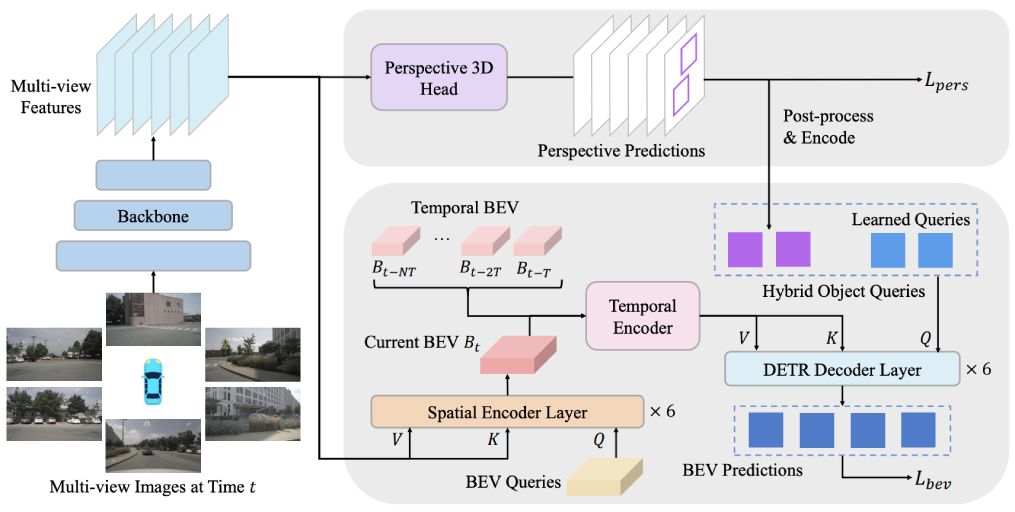

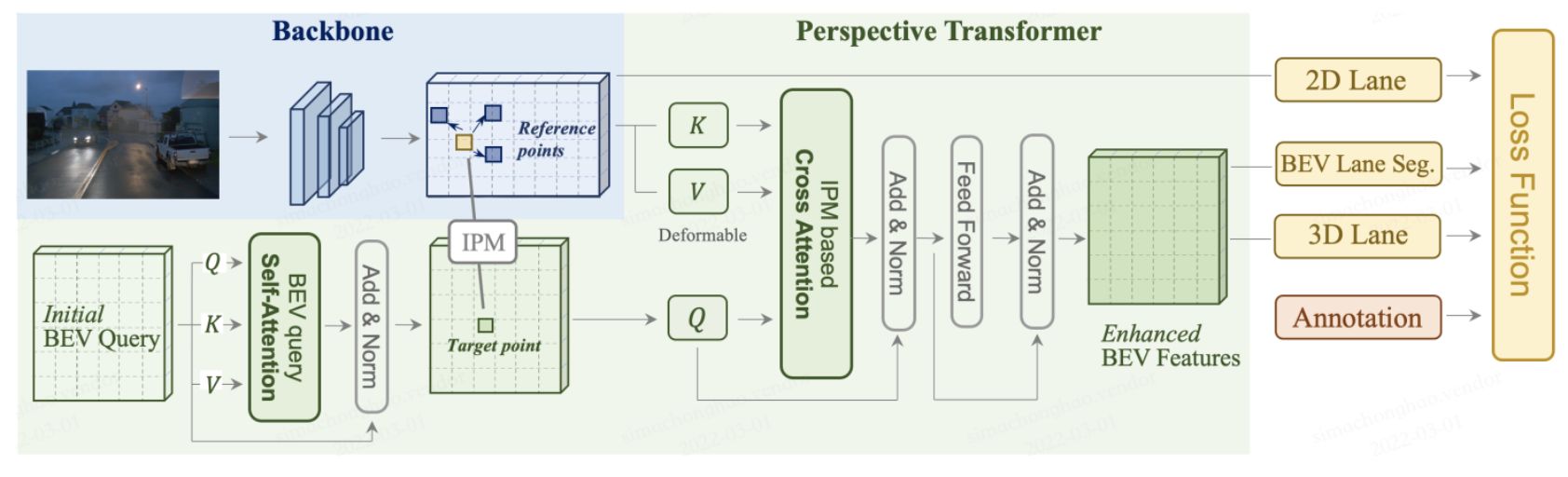

A novel bird's-eye-view (BEV) detector with perspective supervision, which converges faster and better suits modern image backbones.

Yang Li,

Shengyin Jiang,

Yuting Wang,

Hang Xu,

Chunjing Xu,

Jia Zeng,

Shengchuan Zhang,

Liujuan Cao,

Rongrong Ji,

arXiv 2023

We propose GAPretrain, a plug-and-play framework that boosts 3D detection by pretraining with spatial-structural cues and BEV representation.

Wenwen Tong,

Jiangwei Xie,

Yang Li,

Hanming Deng,

Bo Dai,

Lewei Lu,

Hao Zhao,

PRCV 2024

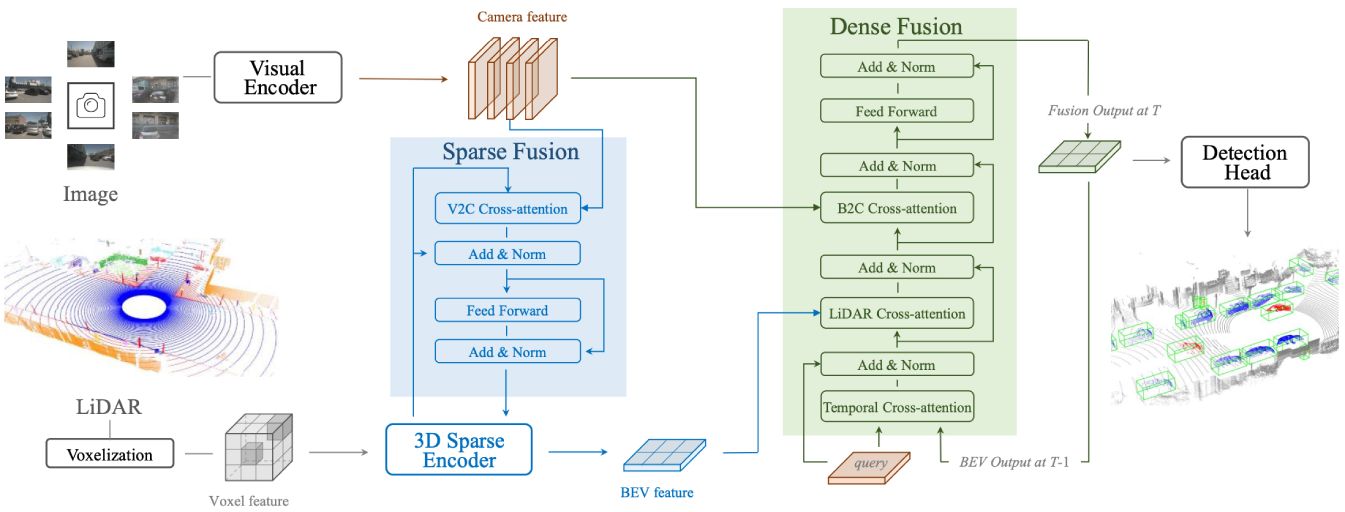

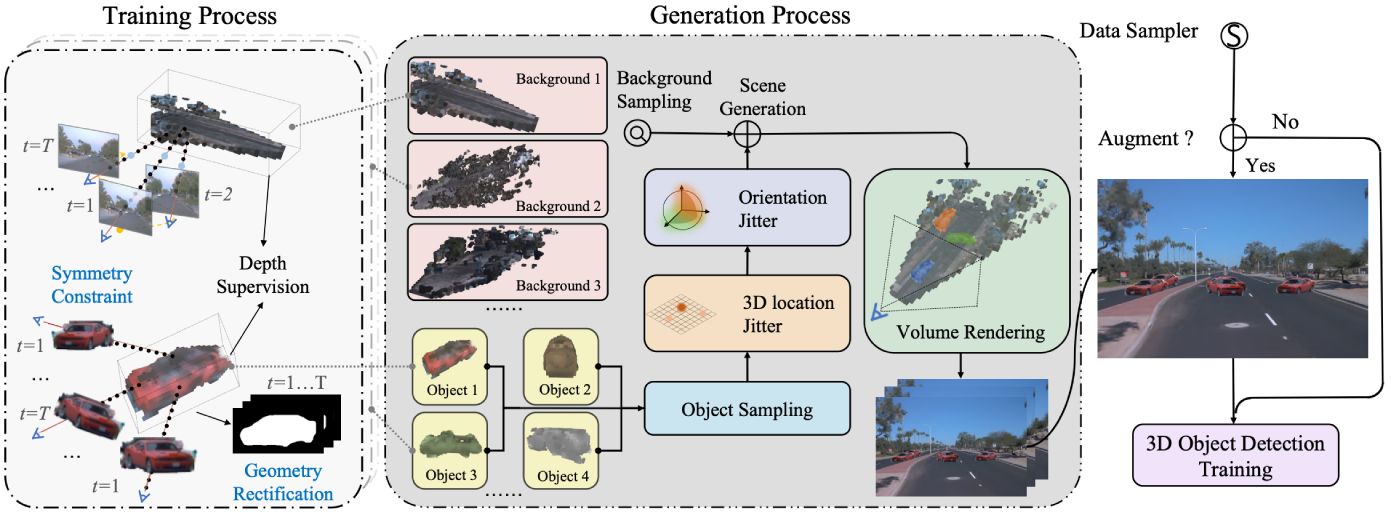

We propose a 3D data augmentation approach, termed Drive-3DAug, to augment camera-based driving scenes in 3D space.

Jia Zeng,

CoRL 2022

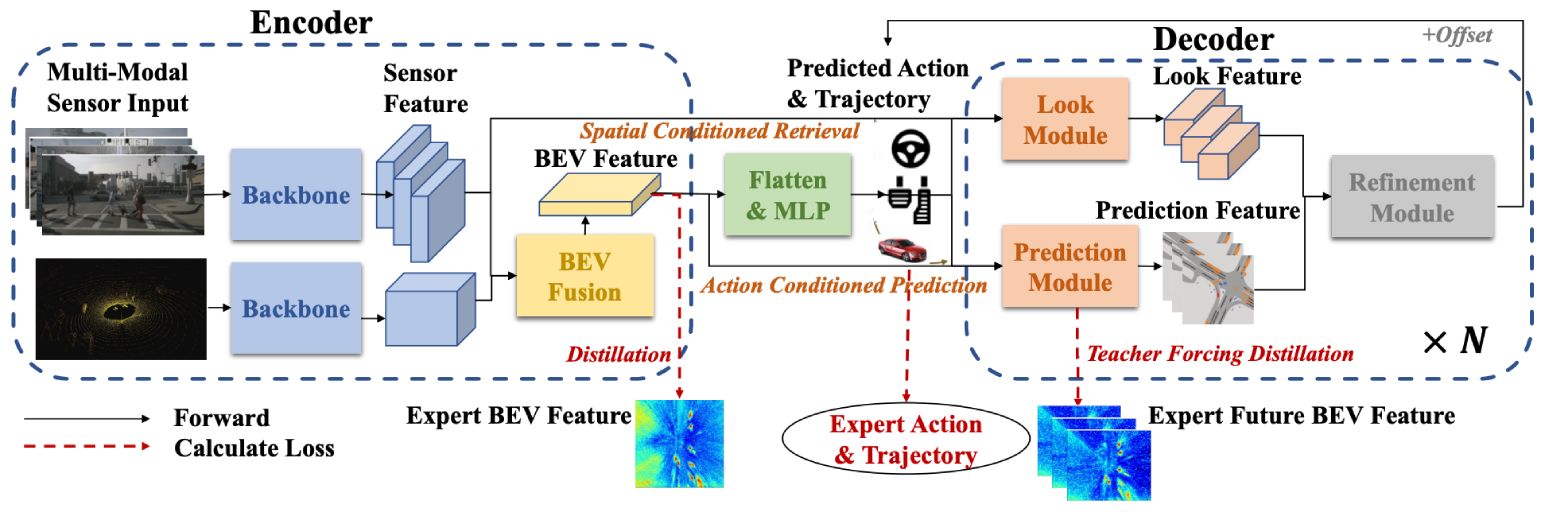

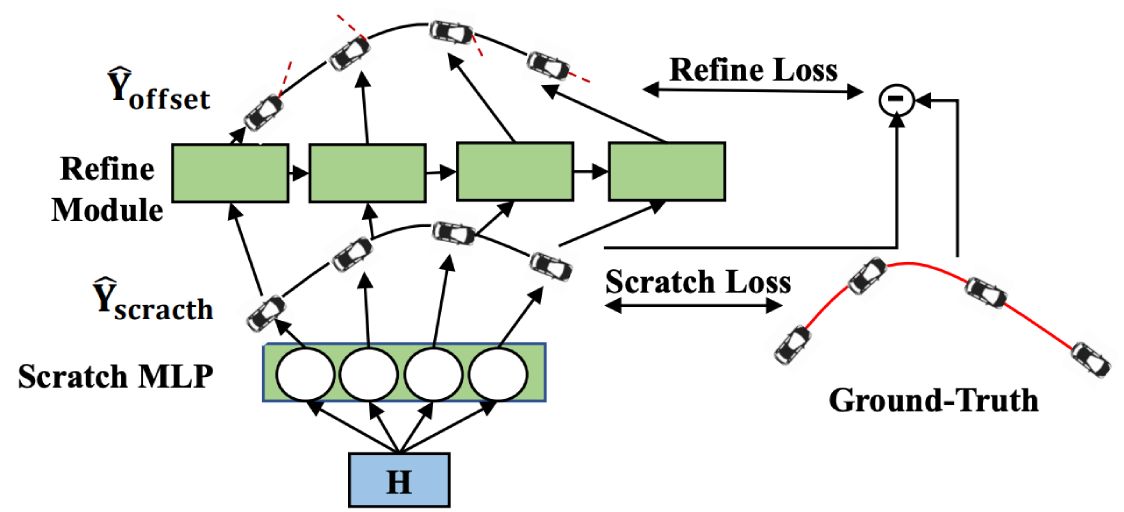

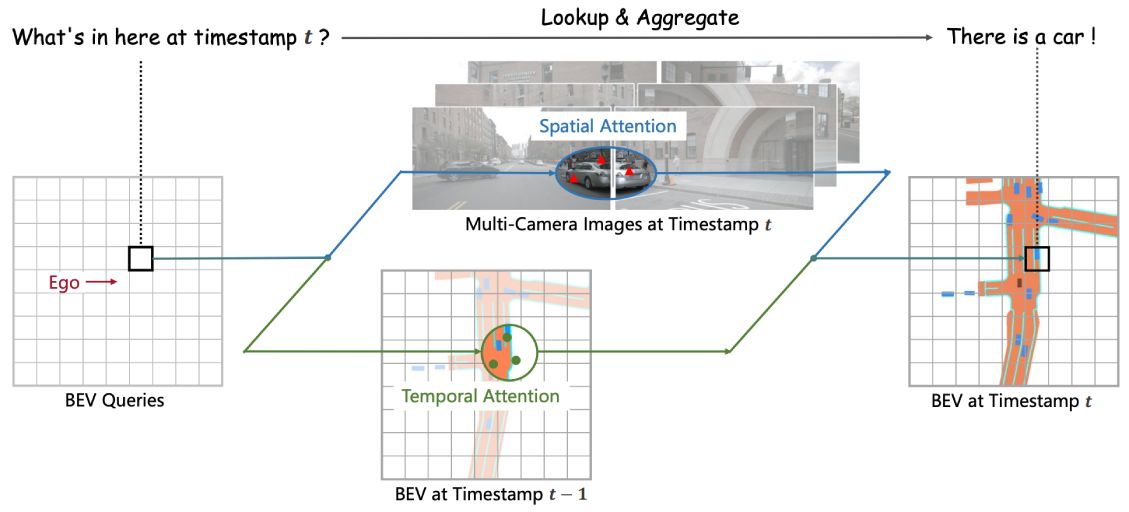

We find that, taking scratch trajectories generated by an MLP as input, a refinement module based on structures with a temporal prior can boost accuracy.

BEVFormer: Learning Bird's-Eye-View Representation From LiDAR-Camera via Spatiotemporal Transformers

Enze Xie,

Tong Lu,

Jifeng Dai

Yang Li,

Zehan Zheng,

Conghui He,

Jianping Shi,

Qingsong Yao,

Yanan Sun,

Renrui Zhang,

Hao Zhao,

Hongzi Zhu,

Yanan Sun,

Fei Xia,

IEEE-TPAMI 2026

Yifei Zhang,

Hao Zhao,

Siheng Chen

Shilin Yan,

Renrui Zhang,

Ziyu Guo,

Wenchao Chen,

Wei Zhang,

Zhongjiang He,

Peng Gao

Peng Gao,

Hao Sun,

Houqiang Li,

Jiebo Luo

Peng Gao,

Renrui Zhang,

Rongyao Fang,

Ziyi Lin,

Hongsheng Li,

Qiao Yu

Shaofeng Zhang,

Lyn Qiu,

Feng Zhu,

Hengrui Zhang,

Rui Zhao,

Xiaokang Yang

CVPR 2022