Autonomous Driving

Autonomous driving enables vehicles to perceive, reason, and act safely in complex environments. We focus on whole-scene perception, critical data generation, and end-to-end decision-making. Our goal is to build a unified and scalable autonomy pipeline grounded in large-scale real-world driving data and efficient world representations.

- SimScale: Learning to Drive via Real-World Simulation at Scale
  A scalable sim-real learning framework that synthesizes high-fidelity driving data and boosts end-to-end planners to achieve robust, generalizable autonomy with principled scaling insights.

- ReSim: Reliable World Simulation for Autonomous Driving
  ReSim is a driving world model that enables Reliable Simulation of diverse open-world driving scenarios under various actions, including hazardous non-expert ones. A Video2Reward model estimates the reward from ReSim's simulated future.

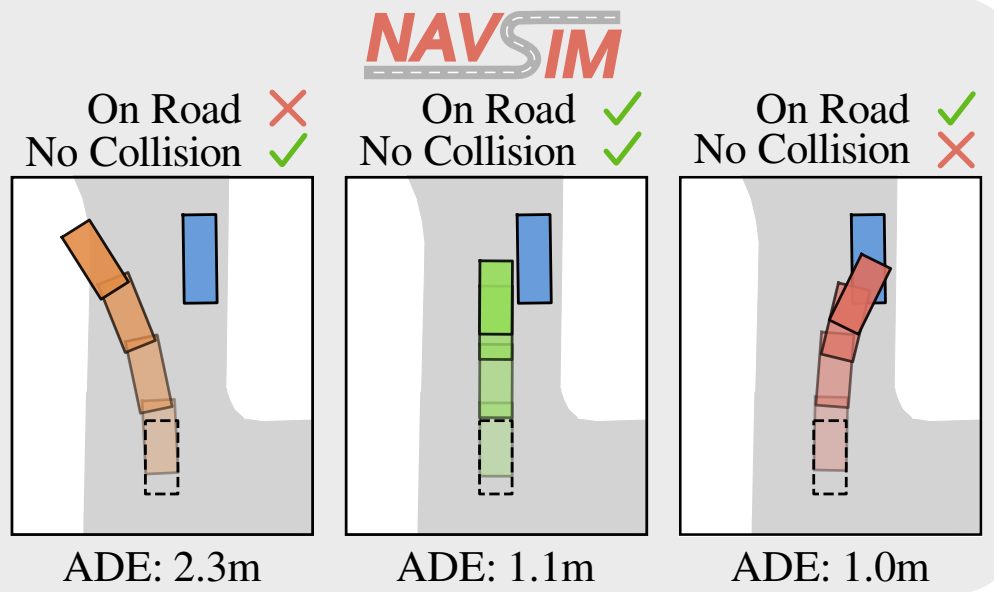

- NAVSIM: Data-Driven Non-Reactive Autonomous Vehicle Simulation and Benchmarking

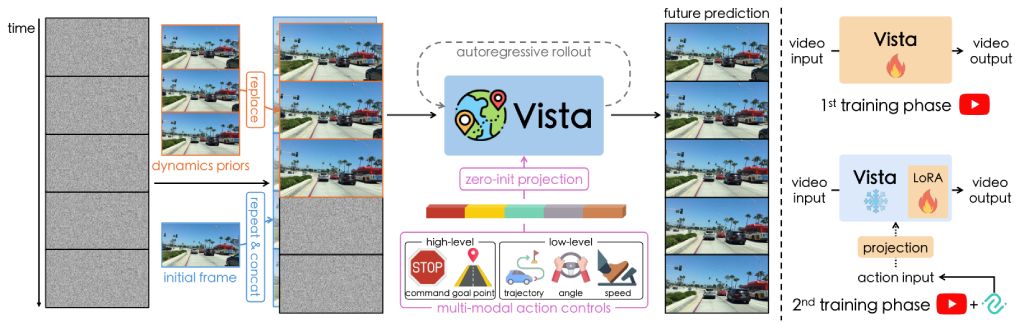

- Vista: A Generalizable Driving World Model with High Fidelity and Versatile Controllability
  A generalizable driving world model with high-fidelity open-world prediction, continuous long-horizon rollout, and zero-shot action controllability.

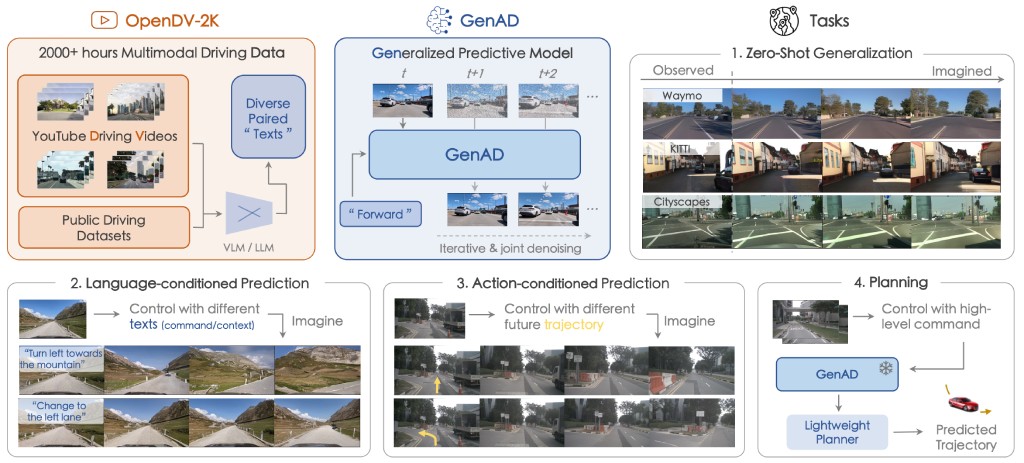

- Generalized Predictive Model for Autonomous Driving
  We aim to establish a generalized video prediction paradigm for autonomous driving by presenting the largest multimodal driving video dataset to date, OpenDV-2K, and a generative model that predicts the future given past visual and textual input, GenAD.

- Visual Point Cloud Forecasting enables Scalable Autonomous Driving
  A new self-supervised pre-training task for end-to-end autonomous driving that predicts future point clouds from historical visual inputs, jointly modeling 3D geometry and temporal dynamics for simultaneous perception, prediction, and planning.

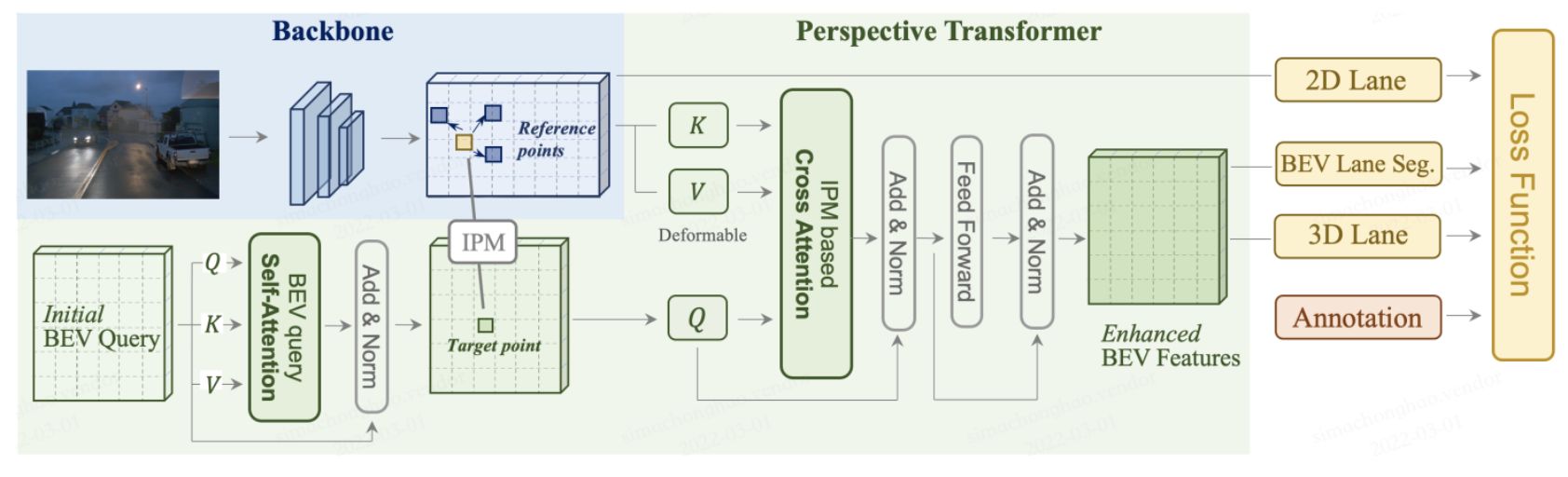

- LaneSegNet: Map Learning with Lane Segment Perception for Autonomous Driving
  We advocate Lane Segment as a map learning paradigm that seamlessly incorporates both map geometry and topology information.

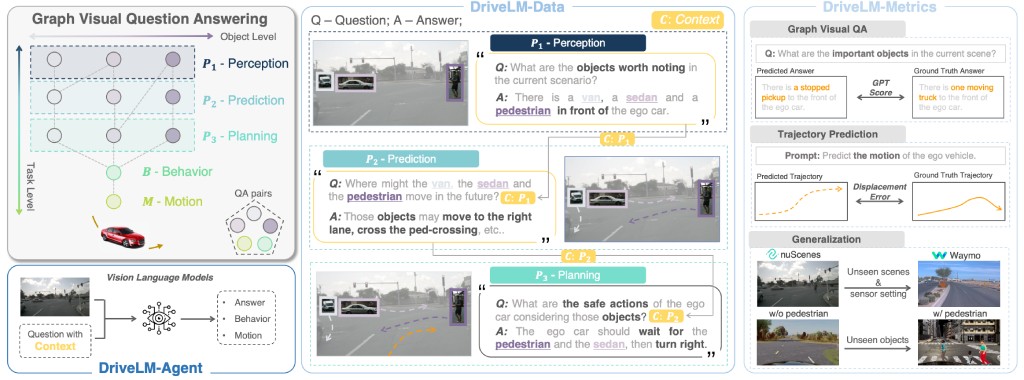

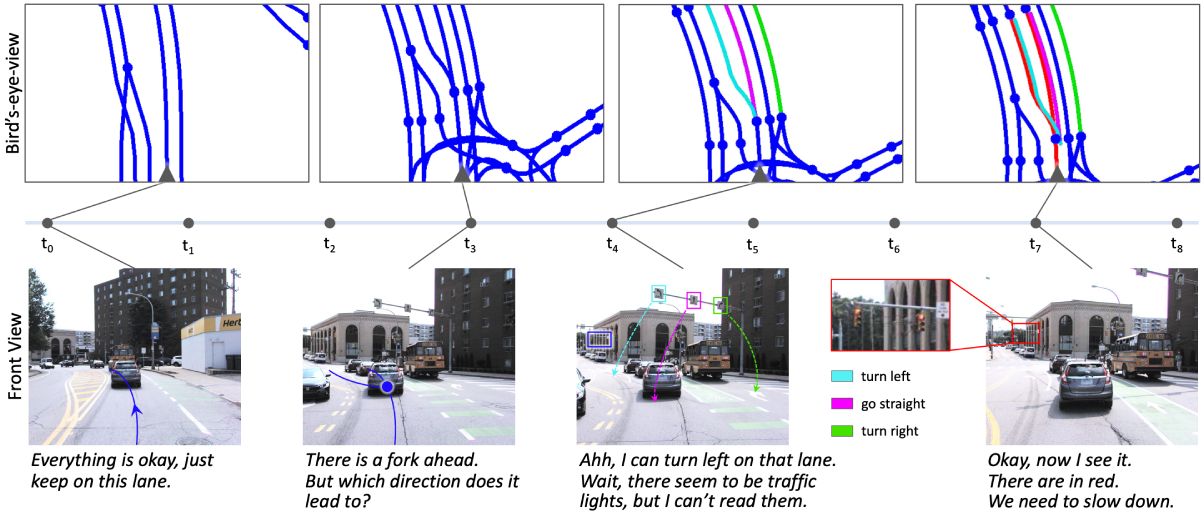

- DriveLM: Driving with Graph Visual Question Answering
  Unlocking the future where autonomous driving meets the unlimited potential of language.

- DriveAdapter: Breaking the Coupling Barrier of Perception and Planning in End-to-End Autonomous Driving
  A new paradigm for end-to-end autonomous driving without the causal confusion issue.

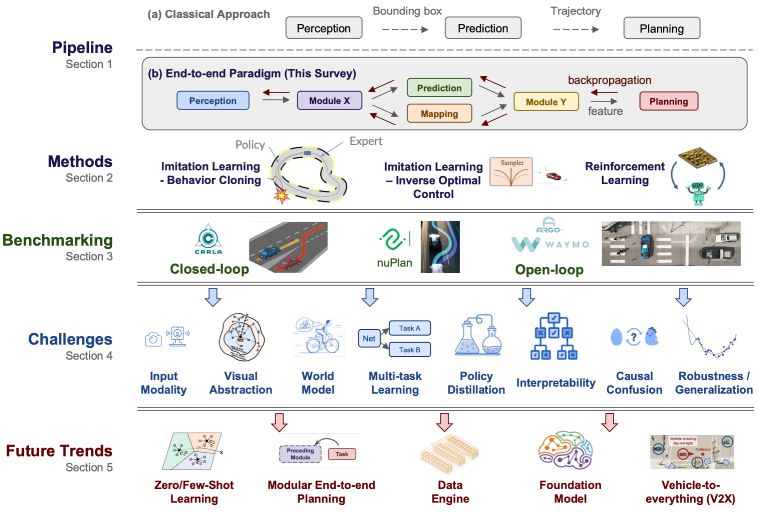

- End-to-End Autonomous Driving: Challenges and Frontiers
  In this survey, we provide a comprehensive analysis of more than 270 papers on the motivation, roadmap, methodology, challenges, and future trends in end-to-end autonomous driving.

- Scene as Occupancy
  Occupancy serves as a general representation of the scene and could facilitate perception and planning across the full stack of autonomous driving.

- Think Twice before Driving: Towards Scalable Decoders for End-to-End Autonomous Driving
  A scalable decoder paradigm that generates the future trajectory and action of the ego vehicle for end-to-end autonomous driving.

- OpenLane-V2: A Topology Reasoning Benchmark for Unified 3D HD Mapping
  The world's first perception and reasoning benchmark for scene structure in autonomous driving.

- Graph-based Topology Reasoning for Driving Scenes
  A new baseline for scene topology reasoning that unifies heterogeneous feature learning and enhances feature interactions via a graph neural network architecture and a knowledge graph design.

- Policy Pre-Training for End-to-End Autonomous Driving via Self-Supervised Geometric Modeling
  An intuitive and straightforward fully self-supervised framework designed for policy pre-training in visuomotor driving.

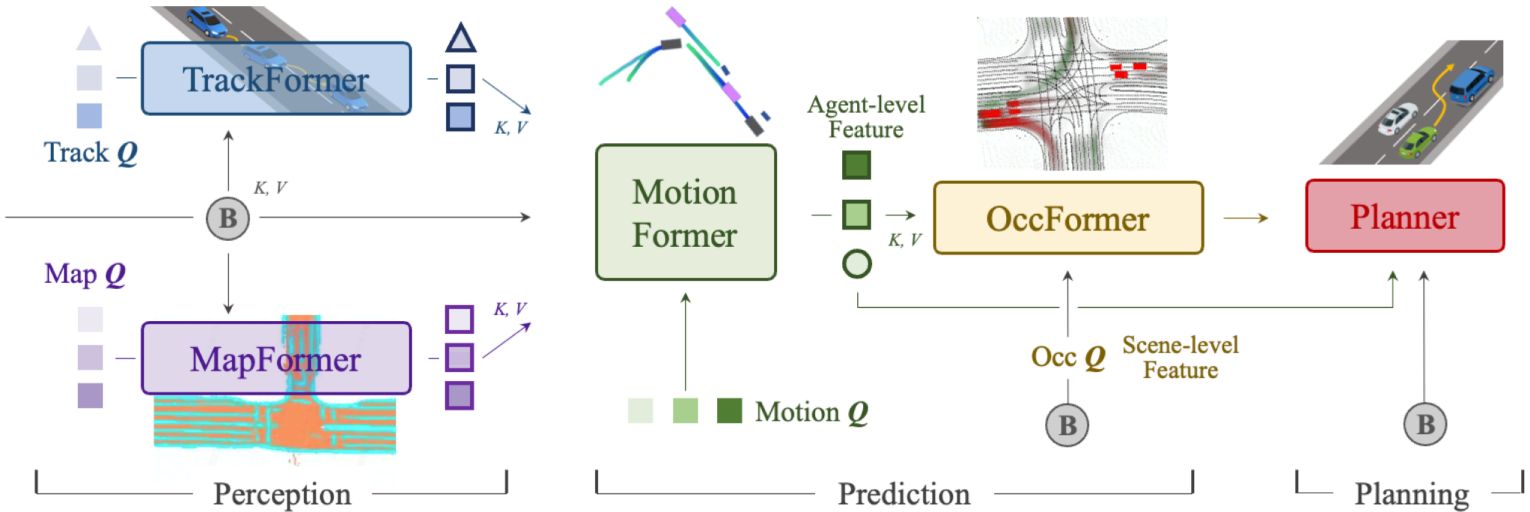

- Planning-oriented Autonomous Driving
  UniAD: The first comprehensive framework that incorporates full-stack driving tasks.

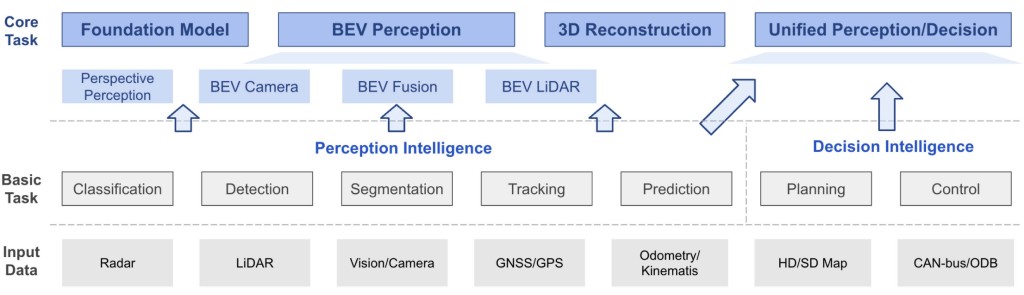

- Delving into the Devils of Bird's-Eye-View Perception: A Review, Evaluation and Recipe
  We review the most recent work on BEV perception and provide an analysis of different solutions.

- Towards Capturing the Temporal Dynamics for Trajectory Prediction: a Coarse-to-Fine Approach
  We find that a refinement module built on structures with a temporal prior, taking scratch trajectories generated by an MLP as input, can boost accuracy.

- ST-P3: End-to-End Vision-Based Autonomous Driving via Spatial-Temporal Feature Learning
  A spatial-temporal feature learning scheme towards a set of more representative features for perception, prediction and planning tasks simultaneously.

- Trajectory-guided Control Prediction for End-to-end Autonomous Driving: A Simple yet Strong Baseline
  We take the initiative to explore combining a controller based on a planned trajectory with control prediction.

- HDGT: Heterogeneous Driving Graph Transformer for Multi-Agent Trajectory Prediction via Scene Encoding
  HDGT formulates the driving scene as a heterogeneous graph with different types of nodes and edges.

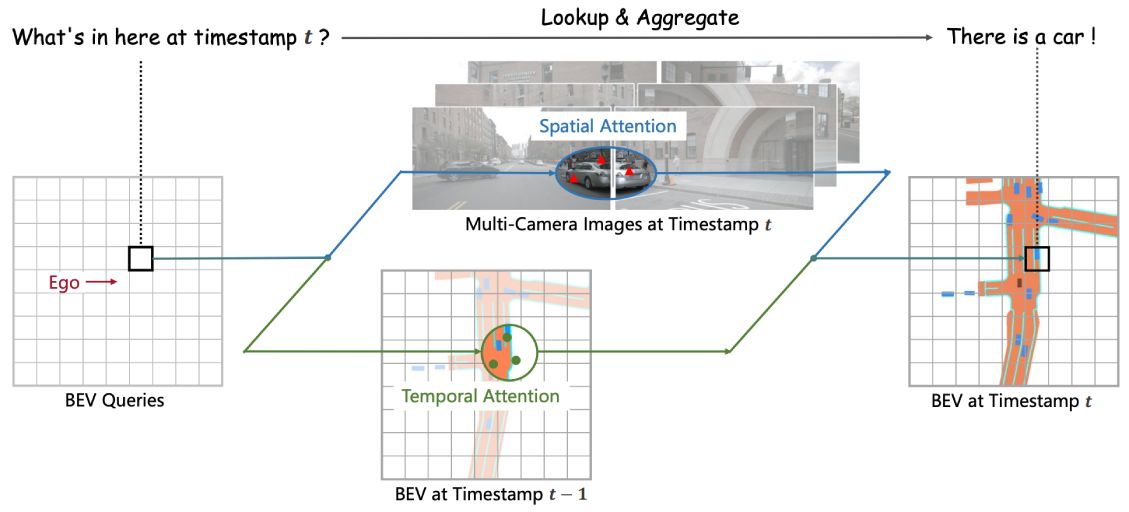

- BEVFormer: Learning Bird's-Eye-View Representation From LiDAR-Camera via Spatiotemporal Transformers
  A paradigm for autonomous driving that applies both Transformer and temporal structures to generate BEV features.